- cross-posted to:

- [email protected]

Earlier this year, Microsoft added a new key to Windows keyboards for the first time since 1994. Before the news dropped, your mind might’ve raced with the possibilities and potential usefulness of a new addition. However, the button ended up being a Copilot launcher button that doesn’t even work in an innovative way.

Logitech announced a new mouse last week. I was disappointed to learn that the most distinct feature of the Logitech Signature AI Edition M750 is a button located south of the scroll wheel. This button is preprogrammed to launch the ChatGPT prompt builder, which Logitech recently added to its peripherals configuration app Options+.

Similarly to Logitech, Nothing is trying to give its customers access to ChatGPT quickly. In this case, access occurs by pinching the device. This month, Nothing announced that it “integrated Nothing earbuds and Nothing OS with ChatGPT to offer users instant access to knowledge directly from the devices they use most, earbuds and smartphones.”

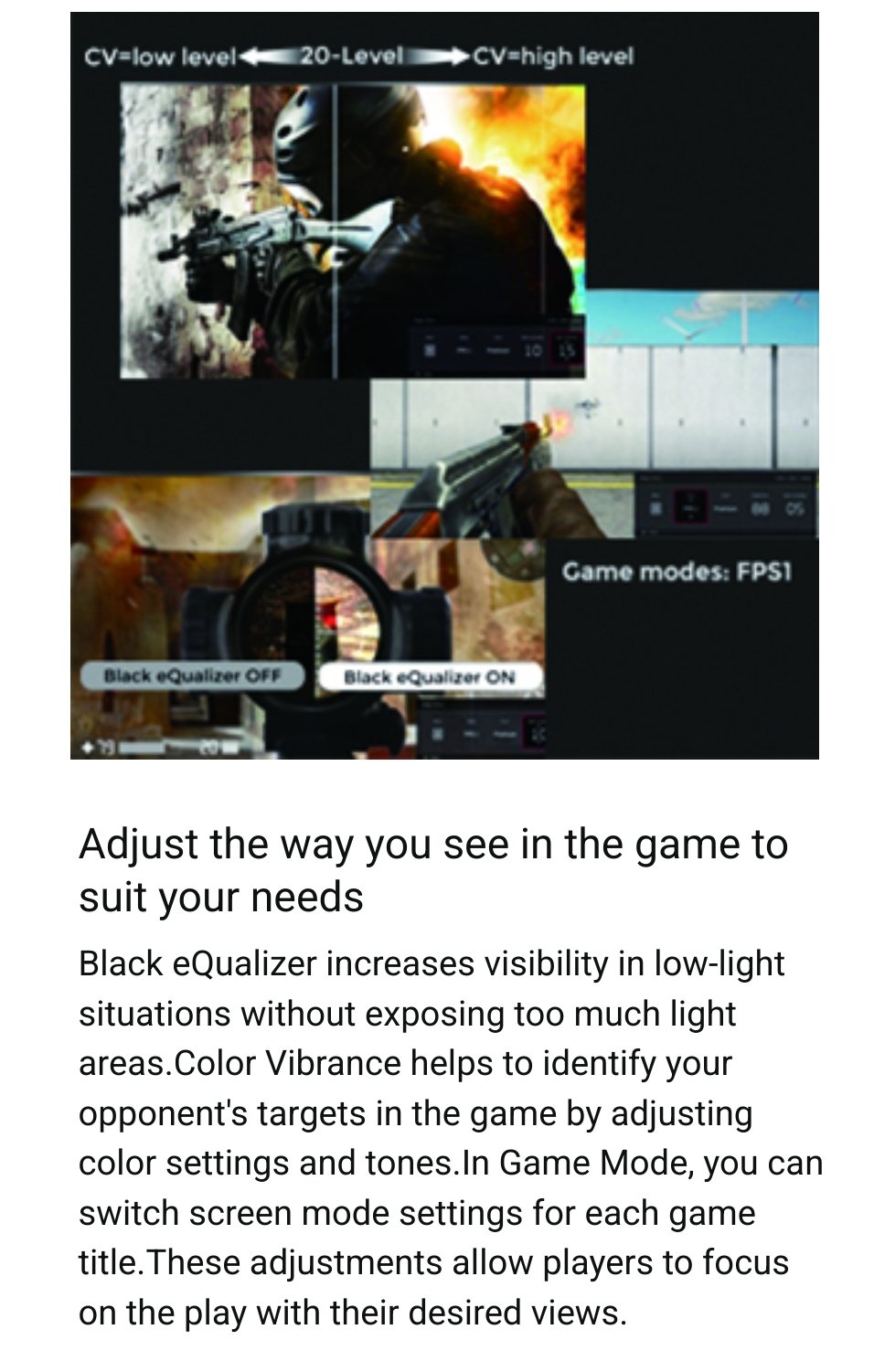

In the gaming world, for example, MSI announced this year a monitor with a built-in NPU and the ability to quickly show League of Legends players when an enemy from outside of their field of view is arriving.

Another example is AI Shark’s vague claims. This year, it announced technology that brands could license in order to make an “AI keyboard,” “AI mouse,” “AI game controller” or “AI headphones.” The products claim to use some unspecified AI tech to learn gaming patterns and adjust accordingly.

Despite my pessimism about the droves of AI marketing hype, if not AI washing, likely to barrage the next couple of years of tech announcements, I have hope that consumer interest and common sense will yield skepticism that stops some of the worst so-called AI gadgets from getting popular or misleading people.

It’s like when tech companies forced “Cloud” everything on us in the early 2010s, and then the digital home assistant craze that followed.

These things are not meant for us. Sure, some people will enjoy or benefit from them in some way, but their primary function is to appease shareholders and investors and drum up cash. It’s why seemingly every company is desperately looking for ways to shoehorn “AI” into their products, even where it’s completely nonsensical.

I disagree. I believe that our interactions with AI represent a breakthrough in the level of data surveillance capitalism can obtain from us.

AI isn’t the product. The users are. AI just makes the data that users provide more valuable. Soon enough, every user will be discussing their most personal thoughts and feelings with Big Brother.

Data collection is theft. Every one of us is being robbed of at least $50 per year. That’s how Facebook and Google are worth billions.

Ever play Deus Ex: Invisible War? Yeah, they did that in there. A ubiquitous series of holoprojectors lets you seemingly interact with a particular pop star who is super friendly and easy to form a relationship with, and it turns out to be a sophisticated AI surveillance network doing exactly what you described. It even screws you over if you reveal too much to it.

Yeah, strongly agree on companies just using AI as a buzzword to make investors excited. Tech companies that aren’t throwing AI into things have a higher chance of their stock price stagnating.

At the time the cloud stuff was first being talked about, I worked at a large tech company (one of the top 10 largest). I remember being told by managers and above how important it was and how we should start thinking about how to integrate it into every facet of our products and workplace. The CEO hammered on this as well, tying KPIs to it at all levels of the business. This company makes all kinds of products in the realm of hardware and software. Everyone went absolutely apeshit integrating it everywhere, including A LOT of places it didn’t belong.

“AI” motorcycles are apparently also somehow hot in trade shows. But the entire purpose of getting on a motorcycle is to get out and ride and enjoy the ride yourself.

The cloud buzzword was the dumbest thing ever. The cloud is an infrastructure technique for deploying server resources. It has zero end-user impact. It made certain features easier for software companies to develop and deploy, but there is nothing fundamentally different in the experience the cloud provides vs. a traditional server. Outside of the industry, the term means fucking nothing to users, and the way it was used was just synonymous with the Internet in general. Whether your file is hosted on the cloud or on a centralized server makes no difference to the end user, and there would be no way to tell from the UI how it was hosted.

Yeah but it gave us the “cloud to butt” browser extension

They forced cloud on us so they could do the same nickel-and-dime billing that web hosts used for CPU cycles/RAM/storage…

…because it’s lucrative as hell when taken to a grand scale.

But there are sometimes side benefits for us.

I, for one, am over the moon levels of happy that I will never spend another weekend patching Exchange servers.

Can I get the list of things that are in my smartphone because we asked for them?

This article should have been titled, “Why the fuck does my mouse need an AI chat-prompt builder?”

Seriously. I want my mouse to do one job: move around the screen and let me click on stuff.

…and if I want “instant access to knowledge”, a Wikipedia bookmark will do the job.

But what if that bookmark had AI? 🤯

Yeah, and there’s nothing stopping people from mapping a button to that if they really want to. Most buttons I’ve been given that do some specific action are buttons I’ve grown to hate, because accidentally pressing them generally costs time.

Like the Windows key, especially back in the day when Alt+Tabbing out of a game was risky (at the very least it would take a long time as Windows paged everything in and out, but it wasn’t rare to also get disconnected from a game or for the game or system to crash entirely), or that fucking Bixby button.

For a long time, the first thing I would do on a new computer was go into the registry and disable the Windows key.
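The comment doesn’t say which registry setting was used. One widely documented approach is the `Scancode Map` binary value under `HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Keyboard Layout`, which remaps keyboard scancodes system-wide; mapping a key to `0x0000` disables it. Here is a minimal Python sketch that only builds the value’s bytes and does not touch the registry. It assumes the usual layout: an 8-byte zero header, a 4-byte entry count that includes the terminator, 4-byte little-endian `(new, old)` entries, and a 4-byte null terminator. The function and constant names are illustrative.

```python
import struct
from typing import List, Tuple

# Extended scancodes are written as 0xE0xx; stored little-endian they appear as "xx E0".
LEFT_WIN = 0xE05B
RIGHT_WIN = 0xE05C


def build_scancode_map(remaps: List[Tuple[int, int]]) -> bytes:
    """Build a Windows `Scancode Map` value from (new_code, old_code) pairs.

    A new_code of 0x0000 disables old_code entirely.
    """
    header = struct.pack("<II", 0, 0)           # version and flags, both zero
    count = struct.pack("<I", len(remaps) + 1)  # number of entries + null terminator
    entries = b"".join(struct.pack("<HH", new, old) for new, old in remaps)
    terminator = struct.pack("<I", 0)
    return header + count + entries + terminator


if __name__ == "__main__":
    value = build_scancode_map([(0x0000, LEFT_WIN), (0x0000, RIGHT_WIN)])
    print(value.hex(" "))
```

Running it prints `00 00 00 00 00 00 00 00 03 00 00 00 00 00 5b e0 00 00 5c e0 00 00 00 00`. On Windows, those bytes could then be written as a `REG_BINARY` with `winreg.SetValueEx(key, "Scancode Map", 0, winreg.REG_BINARY, value)` from an elevated process. The remap takes effect after a reboot.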

> In the gaming world, for example, MSI announced this year a monitor with a built-in NPU and the ability to quickly show League of Legends players when an enemy from outside of their field of view is arriving.

…So it just lets them cheat? I remember when monitor overlay crosshairs were controversial, this is insane to me.

Yep, there are cheating monitors too, and you will never know if other people have one:

> lets them cheat

I’ve said in the past that I think, in the long run, today’s competitive multiplayer games will evolve down one of two paths.

- Esports. Here, you don’t just want another human as a convenient opponent to make the game fun; you specifically want to test one player against another. You have trusted hardware, and you lock it down to give a relatively level playing field. Consoles are closer to this than computers, since an open hardware system plays poorly with this. Xbox has whitelisted controllers and hardware authentication these days.

  It may be that at least a portion of games in this genre optimize for being a good spectator sport, for the enjoyment of the viewer rather than the players, the way professional sports do.

- Make better game AI as an opponent and make the game single-player. I think that a lot of games are gonna go this route. Multiplayer competitive games exist in large part because we aren’t good at building game AI, and humans can stand in for it to some degree. But there are a whole host of problems with multiplayer competitive games, and they’re fundamental to the genre. You can’t just pause to deal with real life, like a kid or a phone call (well, not in games with any great number of players, and it’s obnoxious even when there are only a few). Cheating is an issue and ruins the game for other players. Someone has to lose half the time on average, and that’s probably not optimal from a player-enjoyment standpoint. Ragequitting is a thing. Humans don’t necessarily all want to stay in character. Griefing is a thing. Games have to be online. There is some level of pay-to-win in terms of better network connections or computing hardware, and to the extent that there isn’t, you have to restrict players from using what they want. You need a sufficient number of players in your playerbase, and if you don’t get it, your game fails. When your game – inevitably – loses enough players, it is no longer really playable. Setting difficulty is inevitably imperfect and has to rely on what you can do with matchmaking. Optimal play may not be what’s most fun for other players (camping a spawn point, for example), so you’re always having to structure the game world around reconciling the two. People who lose may not deal well with it and get upset with other people. I mean, the list goes on. I think that the end game here is making better game AI that’s cheaper to include in a game and requires less expertise to do so, maybe via generic “AI” engines the way we have graphics or physics engines. That means shifting away from multiplayer competitive games towards single-player games with better game AI.

The problem is that “AI” doesn’t really entail any concrete technical capabilities, so if the term is seen in a positive light, people will abuse the limits of the definition as far as they are able.

Not really a new phenomenon. Been done in the past as well, with “AI” as well as other things.

With “self-driving cars” being vague – someone could advertise a car that could park itself as “self-driving” – we introduced newer terms that were linked to actual characteristics, like the SAE level system for vehicle autonomy.

Might need to do something similar with AI.
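As an illustration of terms tied to concrete characteristics, here is a sketch of the six SAE J3016 levels of driving automation as a Python enum. Each level carries one checkable property: whether the human must constantly supervise. The member names and the helper function are illustrative, not from any official library. The level boundaries follow J3016, where levels 0 to 2 are driver support features and levels 3 to 5 are automated driving.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE J3016 levels of driving automation (member names are illustrative)."""
    NO_AUTOMATION = 0           # no driving automation
    DRIVER_ASSISTANCE = 1       # steering OR braking/acceleration support
    PARTIAL_AUTOMATION = 2      # steering AND braking/acceleration support
    CONDITIONAL_AUTOMATION = 3  # system drives; human must take over when asked
    HIGH_AUTOMATION = 4         # no takeover needed within its operating domain
    FULL_AUTOMATION = 5         # drives under all conditions


def requires_constant_supervision(level: SAELevel) -> bool:
    """At levels 0-2 the human is still driving and must supervise at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name, requires_constant_supervision(level))
```

A comparable scheme for “AI” would need agreed-on, testable properties of this kind rather than a marketing label.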

Spoiler alert: the rice cooking function was analog the whole time.

I think the Sunbeam Radiant Toaster from 1949 had similar AI. Perfect toast every time! Never burnt!

I am a simple man, I see a link to a Dankpods video, I upvote it.

Holy shit, Star Trek knew. They were trying to warn us.

To tech companies and their product engineers: whatever you do, please please please let me rebind the special button to do literally anything other than whatever buzzword you’re forced to push.

You’ll benefit too, every year just slap a new label on the button depending on what’s the hot topic. Personal assistant? ChatGPT? Virtual Reality? Whatever gets your investors’ hearts racing you can rebrand that button to. Just let it be customizable, for the love of the silicon gods.

Product engineers can’t do shit about this. This is all direction from product and upper management because they always have to follow the trends and release something “new and improved”.

Darn we missed the blockchain train. I want a button that mines a bitcoin when I press it.

For some reason this made me imagine a game that is a cross between Minecraft and Super Mario, where whenever you mine or hit a certain kind of block, a little coin pops up and you get a bitcoin from it. Real bitcoin mining!

Keylogger = bad, unless it can decipher what you’re doing so Costco can order more diapers or more vegan snacks.

They’re trying to shove it down our throats to normalize the idea of automating creativity.

Can we demand a refund if the ai doesn’t do what we want?

No pay more to try again silly

Can’t wait for the AI-enhanced sex toys.

considers

That’d actually probably be a not-unreasonable application for machine learning, if you could figure out some kind of way to measure short-term biological arousal to use as an input. I don’t know if blood pressure or pulse is fast enough. Breathing? Pupil dilation?

Like, you’ve got inputs and outputs whose relationship you don’t know. You have a limited number of them, so the scale of learning is doable. The weighting of any input in determining an output probably varies somewhat from person to person. It’s probably hard to get weighting data in person. Those are the characteristics of a problem you’d want to try machine learning on.
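The learning problem described here is commonly framed as a multi-armed bandit: software tries output settings and keeps whichever one a feedback signal scores best for a particular person. Below is a minimal epsilon-greedy sketch in Python. It assumes a hypothetical `reward` function that turns some sensor reading into a score, since the comment doesn’t settle on a sensor. The simulated response peaking at 200 Hz is made up purely for illustration.

```python
import random
from typing import Callable, Sequence


def epsilon_greedy(
    arms: Sequence[float],
    reward: Callable[[float], float],
    rounds: int = 200,
    epsilon: float = 0.1,
    seed: int = 0,
) -> float:
    """Return the arm with the highest average observed reward.

    `arms` are candidate output settings (e.g. vibration frequencies in Hz);
    `reward` is a hypothetical score derived from whatever sensor is available.
    """
    rng = random.Random(seed)
    totals = {arm: 0.0 for arm in arms}
    counts = {arm: 0 for arm in arms}

    def mean(arm: float) -> float:
        # Average reward so far; untried arms rank last.
        return totals[arm] / counts[arm] if counts[arm] else float("-inf")

    for _ in range(rounds):
        untried = [arm for arm in arms if counts[arm] == 0]
        if untried:
            choice = untried[0]           # try every setting once first
        elif rng.random() < epsilon:
            choice = rng.choice(arms)     # explore: random setting
        else:
            choice = max(arms, key=mean)  # exploit: best setting so far
        totals[choice] += reward(choice)
        counts[choice] += 1

    return max(arms, key=mean)


# Simulated person whose response peaks at 200 Hz (made-up numbers).
best = epsilon_greedy([100, 150, 200, 250, 300], lambda f: -abs(f - 200))
print(best)
```

Epsilon-greedy is just the simplest bandit strategy. With noisy real-world feedback, something like UCB or Thompson sampling would usually explore more efficiently. Because each person gets their own run, the per-person weighting mentioned above is handled naturally.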

IIRC, vibrators tend to have peak effect somewhere around 200 Hz, but I’d very much be willing to believe that that varies from person to person and situation to situation. If one has an electric motor driving an eccentric cam to produce vibration, as game controllers do for rumble effects, then as long as the motor’s controller supports it, you could probably train that pretty precisely, maybe use some other inputs like length of time running.

I don’t know if it’s possible to have a cam with variable eccentricity – sort of a sliding weight that moves toward or away from the outer edge of the cam – but if so, one could decouple vibration frequency and magnitude.
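Why variable eccentricity would decouple the two can be shown with an idealized rotating unbalance. Assume a mass $m$ at radius $r$ from the shaft, spinning at frequency $f$, and ignore damping and the mass of the housing. The force driving the vibration is then the centripetal force on that mass:

$$
F = m\,r\,\omega^2 = m\,r\,(2\pi f)^2
$$

With a fixed cam, $m$ and $r$ are constant, so magnitude is locked to frequency: doubling $f$ quadruples $F$. If $r$ can move, reaching a target force $F^\ast$ at any chosen frequency only requires setting

$$
r = \frac{F^\ast}{m\,(2\pi f)^2}
$$

within the cam’s physical range of $r$.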

googles

Looks like it exists.

https://www.dmg-lib.org/dmglib/main/imagesViewer_content.jsp?id=16182023&skipSearchBar=1

So that’s an output that’d work with a variety of sex toys.

There’s an open-source layer at buttplug.io – not, despite the name, focusing specifically on butt plugs – that abstracts device control across a collection of sex toys, so learning software doesn’t need to be specific to a given toy, can just treat the specific toy involved as another input.

I’m sure that there’s a variety of auditory and visual stimuli that have different effects from person to person, so what’s used today isn’t optimal for everyone.

And, well, sex sells. So if one can produce something effective, monetizing it probably isn’t incredibly hard, if that’s what one would want to do.

EDIT: Actually, that variable-eccentricity cam is designed to be human- rather than machine-adjusted. That might not be the best design if the aim is to have machine control.

Looking through the hardware compatibility list on buttplug.io, I found one such device, the “Edge-o-Matic 3000”. It claims to keep a user near orgasm without actually letting them orgasm. For that to work, there have to be sensors, and they have to react fairly quickly to arousal. It looks like they’re using a pneumatic pressure sensor driven off a bulb in a user’s butt to measure muscle contractions, and are trying to link that to arousal.

https://maustec.io/collections/sex-tech/products/eom3k?variant=40191648432306

> The Edge-o-Matic is a smarter orgasm denial device (for all humans, which also includes men and women) that uses a hollow inflatable butt plug to detect orgasm via muscle contractions in the area. As the user approaches orgasm, these involuntary contractions are recorded and measured to estimate arousal levels and control external stimuli accordingly.

If they’re trying to have software learn to recognize a relationship between muscle contractions and arousal sufficient to produce orgasm, if it’s automatic rather than having someone tweaking variables, that’s machine learning. Maybe “AI” is a bit pretentious, but it’d be a sex toy doing machine learning today.

That’s an interesting idea, but:

- I’m dubious that it actually works well. It’s described as being a work in progress.

- Even if it works and solves the problem they’re trying to solve (being able to reliably predict orgasm), I’m not sure that muscle contractions can be used to predict arousal more broadly.

- My guess is that, as sensors go, mandating that someone have an inflatable bulb up their butt so the sensor can get readings is kind of constraining; not everyone is going to want that at all, much less when they’re, well, playing with sex toys. Their butt might be otherwise occupied.

That being said, it’s gotta be at least viable enough for someone to have been willing to put work into commercializing a device based on that input. I’d believe that muscle contractions are an input one could reasonably derive training data from.

Maybe one could use brainwaves as an input. That’d avoid physical delay. I’ve got no idea how or if they link to arousal, but I’ve seen inexpensive, noninvasive sensors that log them. Biofeedback off those was trendy in the 1970s or so, and people put out products for it.

https://en.wikipedia.org/wiki/Electroencephalography

At least according to this paper, sexual arousal does produce a unique signature:

https://link.springer.com/article/10.1007/s10508-019-01547-3

Neuroelectric Correlates of Human Sexuality: A Review and Meta-Analysis

> Taken together, our review shows how neuroelectric methods can consistently differentiate sexual arousal from other emotional states.

If that signature is primitive enough, it’s probably similar across people, which would make it easier to train a meter on EEG data from one set of people and then use it on others.

https://www.sciencedirect.com/science/article/abs/pii/S009130571400032X

> There is a remarkable similarity between the cortical EEG changes produced by sexually relevant stimuli in rats and men.

That sounds promising.

There’s an open-source-hardware EEG board with two channels, without a headband, for 99 EUR.

https://www.olimex.com/Products/EEG/OpenEEG/EEG-SMT/open-source-hardware

Some more end-user-oriented headsets exist.

https://imotions.com/blog/learning/product-guides/eeg-headset-prices/

Hmm. Though psychologists must have wanted to measure sexual arousal for research. You’d think that if EEGs were the best route, they’d have used them rather than measuring physical changes.

https://www.sciencedirect.com/science/article/abs/pii/S2050052115301414

> Over the past four decades, there has been a growing interest in the psychophysiological measurement of female sexual arousal. A variety of devices and methodologies have been used to quantify and evaluate sexual response with the ultimate goal of increasing our understanding of the process involved with women’s sexual response, including physiological mechanisms, as well as psychological, social, and biological correlates. The physiological component of sexual response in women is typically quantified by measuring genital change. Increased blood flow to the genital and pelvic region is a marker of sexual arousal, and a number of instruments have been developed to directly or indirectly capture this change [1]. Although the most popular instrument for assessing female sexual response, the vaginal photoplethysmograph (VPP), measures genital response intravaginally, the majority of other instruments focus on capturing sexual response externally, for example, on the labia or clitoris.

Hmm. That’s measuring physical changes, not the brain.

https://en.wikipedia.org/wiki/Vaginal_photoplethysmograph

> Vaginal photoplethysmography (VPG, VPP) is a technique using light to measure the amount of blood in the walls of the vagina.
>
> The device that is used is called a vaginal photometer. The device is used to try to obtain an objective measure of a woman’s sexual arousal. There is an overall poor correlation (r = 0.26) between women’s self-reported levels of desire and their VPG readings.[1]

Even setting aside concerns about responsiveness in time, existing methods for measuring arousal from physical changes in the body don’t sound all that great.

As in, maybe measuring the brain is gonna be a better route, if it’s practical.

I couldn’t get buttplug.io to work 😒

Lol why wait, Lovense is already touting AI driven patterns.

What are they using as input? Like, you can have software that controls a set of outputs learn which output combinations are good at producing a given input.

But you gotta have an input, and looking at their products, I don’t see sensors.

I guess they have smartphone integration, and that’s got sensors, so if they can figure out a way to get useful data on what’s arousing somehow from that, that’d work.

googles

https://techcrunch.com/2023/07/05/lovense-chatgpt-pleasure-companion/?guccounter=1

> Launched in beta in the company’s remote control app, the “Advanced Lovense ChatGPT Pleasure Companion” invites you to indulge in juicy and erotic stories that the Companion creates based on your selected topic. Lovers of spicy fan fiction never had it this good, is all I’m saying. Once you’ve picked your topics, the Companion will even voice the story and control your Lovense toy while reading it to you. Probably not entirely what those 1990s marketers had in mind when they coined the word “multimedia,” but we’ll roll with it.
>
> Riding off into the sunset in a galaxy far, far away? It’s got you (un)covered. A sultry Wild West drama featuring six muppets and a tap-dancing octopus? No problem, partner. Finally want to dip into that all-out orgy fantasy you have where you turn into a gingerbread man, and you’re leaning into the crisply baked gingerbread village? Probably . . . we didn’t try. But that’s part of the fun with generative AI: If you can think it, you can experience it.
>
> Of course, all of this is a way for Lovense to sell more of its remote controllable toys. “The higher the intensity of the story, the stronger and faster the toy’s reaction will be,” the company promises.

Hmm.

Okay, so the erotica text generation stuff is legitimately machine learning, but that’s not directly linked to their hardware.

Ditto for LLM-based speech synth, if that’s what they’re doing to generate the voice.

It looks like they’ve got some sort of text classifier to estimate the intensity, how erotic a given passage in the text is, then they just scale up the intensity of the device their software is controlling based on it.

The bit about trying to quantify the emotional content of text isn’t new – sentiment analysis is a thing – but I assume that they’re using some existing system to do that, and that they aren’t able to train it further themselves based on how people react to their specific product.

I’m guessing that this is gluing together existing systems that have used machine learning, rather than themselves doing learning. Like, they aren’t learning what the relationship is between the settings on their device in a given situation and human arousal. They’re assuming a simple “people want higher device intensity at more intense portions of the text” relationship, and then using existing systems that were trained as an input.
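The fixed relationship suspected here, with no learning on the device side, could look something like the following minimal Python sketch. It assumes a hypothetical upstream classifier that has already produced a per-passage intensity score in [0, 1]. The 0 to `max_level` device scale and the exponential smoothing (so the level doesn’t jump between passages) are illustrative choices, not anything Lovense has documented.

```python
from typing import Iterable, List


def intensity_schedule(
    scores: Iterable[float], max_level: int = 20, alpha: float = 0.5
) -> List[int]:
    """Map per-passage intensity scores in [0, 1] to device levels 0..max_level.

    `scores` come from a hypothetical text classifier; `max_level` and `alpha`
    are illustrative. alpha=1.0 disables smoothing (a pure linear mapping).
    """
    levels: List[int] = []
    smoothed = 0.0
    for score in scores:
        score = min(max(score, 0.0), 1.0)                   # clamp classifier output
        smoothed = alpha * score + (1 - alpha) * smoothed  # exponential moving average
        levels.append(round(smoothed * max_level))         # scale to device range
    return levels


if __name__ == "__main__":
    print(intensity_schedule([0.0, 1.0, 1.0, 1.0]))  # ramps up instead of jumping
```

Nothing in this loop updates based on how the user actually responds. That missing feedback step is what separates gluing together already-trained systems from the device doing any learning itself.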

Lovense is basically just making a line go up and down to raise and lower vibration intensities with AI. They have tons of user generated patterns and probably have some tracking of what people are using through other parts of their app. It’s really not that complicated of an application.

Wait, so we should poison AI data to force AI sex toys to only allow edging?

Filtering out bad data from their training corpus is kinda part of the work that any company doing machine learning is gonna have to deal with, sex toy or no.

Meta put AI search into Instagram search. And you can’t do any search without agreeing to use AI search. No option to opt out.

This has been going on for a while with microchips and network access, and now this is the latest. My fridge just needs to keep things cold. A clock on a microwave? Fine. It does not need to know the exact date. Etc., etc.

It’s like no one’s seen predictive text or denoising before.

Got damn robot I ain’t got no tree FIDDY

This article is a bit late?

Who buys new peripherals? You can get 100% functional peripherals for a couple of bucks from any thrift store.

Who? I dunno, about 98% of users or so?

Why?

I’m guessing to get a new product, guaranteed to work, be compatible with other current equipment, software etc. Also easy to just order online without having to go find a unit in acceptable condition for a good price.

The people supplying the thrift stores with used peripherals.