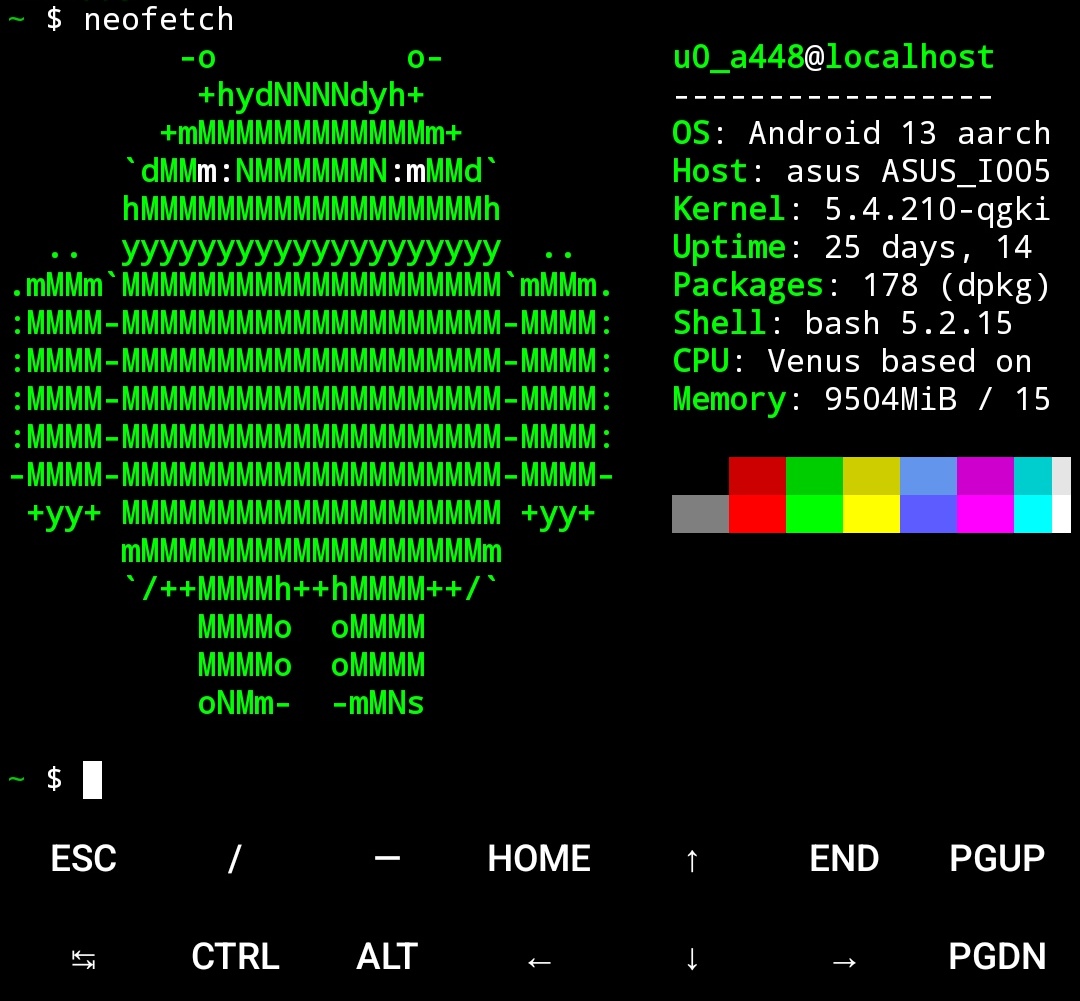

Not at my PC so this is the best I’ve got

Not to throw out an unwanted suggestion, but would an OVHCloud Eco server work for this?

I'm not an expert in any way, but I've had no issues with my instance, and it was a lot cheaper than anything else I could find.

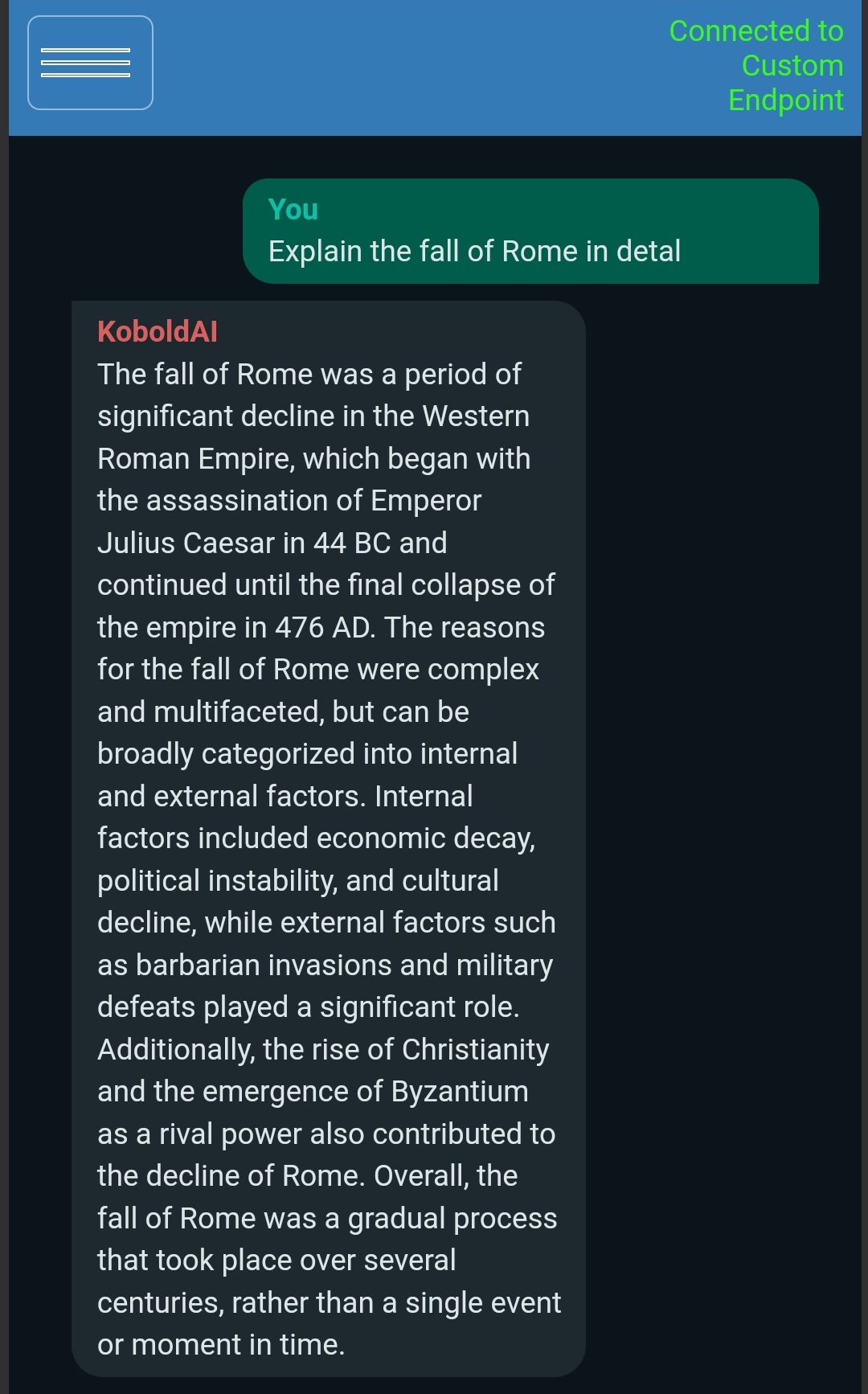

It’s Wizard-Vicuna-7B-Uncensored-GGML

Been running it on my phone through Koboldcpp
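For anyone wanting to script against it: KoboldCpp serves a KoboldAI-compatible HTTP API once a model is loaded. Below is a minimal sketch using only the Python standard library. It assumes KoboldCpp is on its default local port (5001) and uses the `/api/v1/generate` endpoint; the helper names `build_generate_request` and `extract_text` are hypothetical, and the `max_length` value is an arbitrary example.

```python
import json
import urllib.request

# Assumption: KoboldCpp running locally on its default port, exposing the
# KoboldAI-compatible generate endpoint.
KOBOLD_URL = "http://localhost:5001/api/v1/generate"


def build_generate_request(prompt: str, max_length: int = 120) -> urllib.request.Request:
    """Build (but do not send) a POST request asking the loaded model for a completion."""
    payload = {"prompt": prompt, "max_length": max_length}
    return urllib.request.Request(
        KOBOLD_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(response_body: bytes) -> str:
    """Pull the generated text out of a {"results": [{"text": ...}]} response."""
    return json.loads(response_body)["results"][0]["text"]


# Sending it requires a running KoboldCpp instance:
# with urllib.request.urlopen(build_generate_request("Hello")) as resp:
#     print(extract_text(resp.read()))
```

Building the request separately from sending it keeps the payload easy to inspect while tweaking prompts.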

I'm currently working on a Discord bot, though it's still very much a work in progress.

It's a rewrite of a bot I made a few months ago in Python. I wasn't getting the control I needed from the available libraries, and based on my testing so far, this rewrite gives me that control.

It uses ML to generate text replies (currently using ChatGPT) and images (currently using DALL-E and Stable Diffusion). I've got text generation working; I just need to get image generation working now.

Link to the GitHub repo: https://github.com/2haloes/Delta-bot-rusty

Link to the original bot (lists the environment variables that need to be set): https://github.com/2haloes/Delta-Discord-Bot
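To illustrate the text-reply step, here is a rough sketch of how a Discord message could be turned into a request to OpenAI's Chat Completions endpoint. It is written in Python purely for illustration and is not the bot's actual (Rust) code. The model name `gpt-3.5-turbo`, the environment variable `OPENAI_API_KEY`, and the helper names are assumptions; check the original bot's README for the variable names it really uses.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(user_message: str, system_prompt: str,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a chat completion request for one Discord message.

    Hypothetical helper: OPENAI_API_KEY and the default model are assumptions.
    """
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }
    api_key = os.environ.get("OPENAI_API_KEY", "")
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Return the assistant's text from a Chat Completions response body."""
    return json.loads(response_body)["choices"][0]["message"]["content"]
```

The bot would then post the string from `extract_reply` back to the channel; image generation would follow the same request/parse pattern against a different endpoint.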

196 isn’t a place, it’s a people

Congrats to Iceland!

My personal favorite is Timesplitters 2, with Timesplitters Future Perfect very close behind.

Can’t beat a good GoldenEye/Perfect Dark style shooter

According to the Apollo dev, they were taking in $500,000 a year ($10 from each of 50,000 subscriptions). I don't know if anyone else has revealed any figures.

I tried running this with some output from a Wizard-Vicuna-7B-Uncensored model and it returned

('Human', 0.06035491254523517)

So I don't think this hits the mark. To be fair, I got it to generate something really dumb, but a perfect LLM detection tool will likely never exist.

The good news is that it didn't flag my own writing as a false positive.

Below is the output of my LLM; there's a decent amount of swearing, so heads up.

Edit:

I tried a more sensible question and still got a false negative:

('Human', 0.03917657845587952)
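If you want to keep score while testing a detector like this, a small tally of its verdicts on text you know came from an LLM gives a false-negative rate. This is a sketch that only assumes the `(label, score)` tuple shape shown in the results above; the label the tool uses for machine-written text isn't shown, so the code counts only `'Human'` verdicts, and the meaning of the score is not assumed.

```python
from typing import List, Tuple

# The two results reported above, both for text known to come from an LLM.
results_on_llm_text: List[Tuple[str, float]] = [
    ("Human", 0.06035491254523517),
    ("Human", 0.03917657845587952),
]


def false_negative_rate(results: List[Tuple[str, float]]) -> float:
    """Fraction of known-LLM samples that the detector labelled 'Human'."""
    if not results:
        raise ValueError("need at least one result")
    misses = sum(1 for label, _score in results if label == "Human")
    return misses / len(results)


print(false_negative_rate(results_on_llm_text))  # 1.0
```

Two samples are far too few to say anything firm about the tool; the point is that running it over a larger batch of known LLM outputs (and known human text, for false positives) gives a fairer picture.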

If this is real then I can’t wait for it!

Hopefully it ends up being the complete edition (Portable content and The Answer included)

Edit: This is real and was accidentally uploaded too early: https://personacentral.com/persona-3-reload-announced/

I've been using uncensored models in Koboldcpp to generate whatever I want, but you'll need enough RAM to run the models.

I generated this using Wizard-Vicuna-7B-Uncensored-GGML, but I'd suggest using at least the 13B version.

It’s a basic reply but it’s not refusing
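On the RAM point, a back-of-envelope estimate for the model weights alone is parameter count × bits per weight ÷ 8. The sketch below assumes roughly 4.5 bits per weight, which corresponds to 4-bit block quantization with a 16-bit scale per 32-weight block; the real figure depends on the quantization type you download, and the context/KV cache plus the OS need extra memory on top. The 7B and 13B counts are the nominal sizes from the model names, not exact parameter counts.

```python
def estimate_weight_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough size of quantized model weights in GB (1 GB = 1e9 bytes).

    Covers weights only; context cache and runtime overhead are extra.
    """
    return n_params * bits_per_weight / 8 / 1e9


# Assumption: ~4.5 bits/weight for a 4-bit quantized GGML model.
for name, n_params in [("7B", 7e9), ("13B", 13e9)]:
    print(f"{name}: ~{estimate_weight_gb(n_params, 4.5):.1f} GB")
# 7B: ~3.9 GB
# 13B: ~7.3 GB
```

So a 13B model at 4-bit roughly doubles the memory needed compared with the 7B one, which is the main thing to check before switching.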