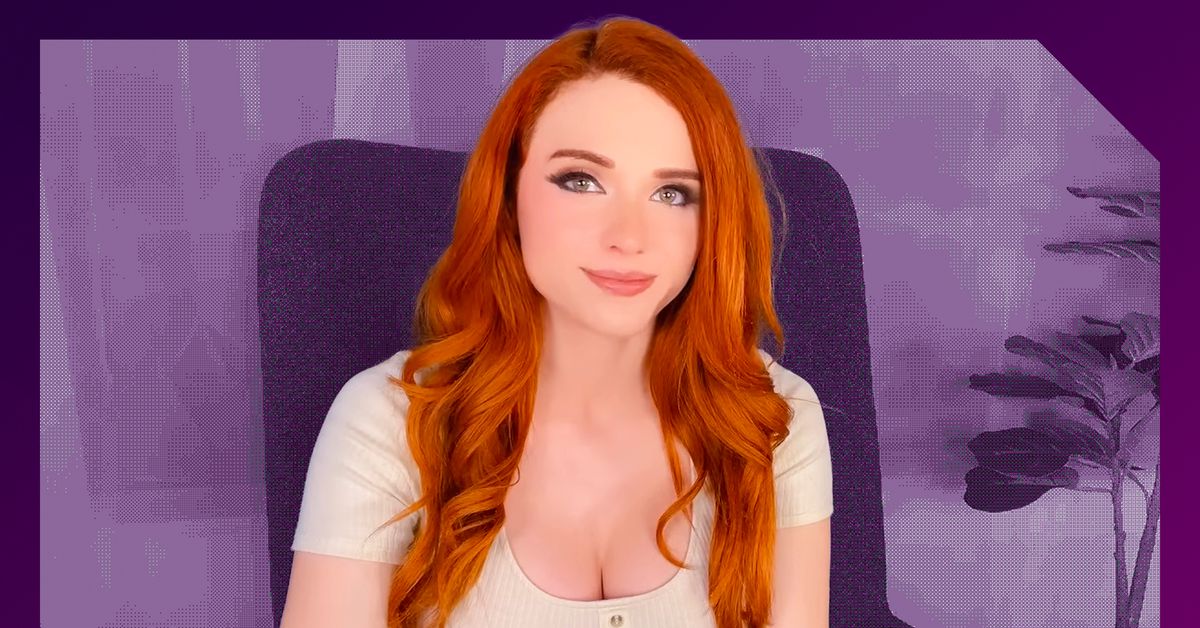

there is… a lot going on here, and it’s part of a broader trend which is probably not for the better and speaks to some deeper-seated issues we currently have in society. a choice moment from the article here on another influencer doing a similar thing earlier this year, and how that went:

Siragusa isn’t the first influencer to create a voice-prompted AI chatbot using her likeness. The first would be Caryn Marjorie, a 23-year-old Snapchat creator, who has more than 1.8 million followers. CarynAI is trained on a combination of OpenAI’s ChatGPT-4 and some 2,000 hours of her now-deleted YouTube content, according to Fortune. On May 11, when CarynAI launched, Marjorie tweeted that the app would “cure loneliness.” She’d also told Fortune that the AI chatbot was not meant to make sexual advances. But on the day of its release, users commented on the AI’s tendency to bring up sexually explicit content. “The AI was not programmed to do this and has seemed to go rogue,” Marjorie told Insider, adding that her team was “working around the clock to prevent this from happening again.”

I don’t really like the logic of “chatbots like this will help cure loneliness.” It might help someone feel less lonely at first. But then it’ll be a crutch and, if anything, hurt people’s ability to socialize with other real people. Like it’s a quick dopamine hit that will slowly dig you deeper into the hole you feel you’re in.

Adding on to your comment, I read a really interesting study recently that suggests that interacting with AI engages the social parts of our brains but does not provide the same stimuli/feedback as interacting with a real person, leading to increased loneliness and thus increased alcohol abuse and insomnia.

I was wondering. Seemingly, it could teach interaction skills as long as the bot wasn’t abnormally accommodating. Maybe it works for some and not others. So, it could be a therapy option. Just needs to be monitored for whether the person uses it to hurt themselves.

Fascinating, do you happen to have a link? No worries if it’s buried or you don’t remember, but I’d love to read it

Sure thing, here ya go!

Apologies for the extremely late reply but thank you so much for this fascinating article!

It is like none of these people have seen any sci-fi released over the last 50 years.