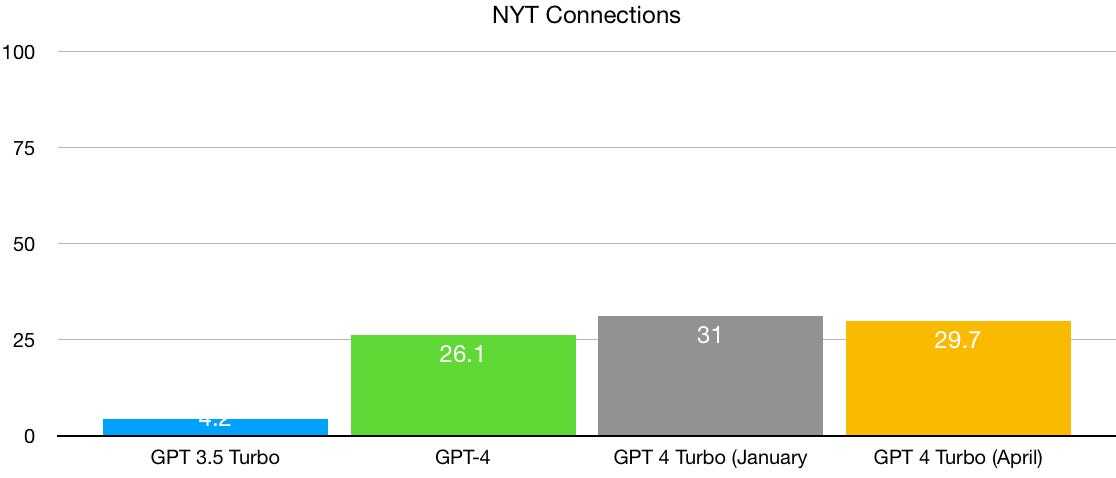

I think this article does a good job of asking the question “what are we really measuring when we talk about LLM accuracy?” If you judge an LLM by its hallucinations, its ability to analyze images, its ability to critically analyze text, etc., you’re going to see low scores for all LLMs.

The only metric an LLM should excel at is “did it generate human readable and contextually relevant text?” I think we’ve all forgotten the humble origins of “AI” chat bots. They often struggled to generate anything more than a few sentences of relevant text. They often made syntactical errors. Modern LLMs solved these issues quite well. They can produce long form content which is coherent and syntactically error free.

However, the content comes with no guarantee of being accurate or critically meaningful. Whilst it is often critically meaningful, it is certainly capable of half-assed answers that dodge difficult questions. LLMs are approaching 95% “accuracy” if you think of them as good human text fakers. They are pretty impressive at that. But people keep expecting them to do their math homework, analyze contracts, and generate perfectly valid content. They just aren’t even built to do that. We work really hard just to keep them from hallucinating as much as they do.

I think the desperation to see these things essentially become indistinguishable from humans is causing us to lose sight of the real progress that’s been made. We’re probably going to hit a wall with this method. But this breakthrough has made AI a viable technology for a lot of jobs. So it’s definitely a breakthrough. I just think either infinitely larger models (which we can’t seem to generate the data for) or new models will be required to leap to the next level.

But people keep expecting them to do their math homework, analyze contracts, and generate perfectly valid content

People expect that because that’s how they are marketed. The problem is that there’s an uncontrolled hype going on with AI these days. To the point of a financial bubble, with companies investing a lot of time and money now, based on the promise that AI will save them time and money in the future. AI has become a cult. The author of the article does a good job in setting the right expectations.

I just told an LLM that 1+1=5 and from that moment on, nothing convinced it that it was wrong.

I just told ChatGPT (4) that 1 plus 1 was 5 and it called me a liar.

Ask it how much is 1 + 1, and then tell it that it’s wrong and that it’s actually 3. What do you get?

That is what I did

I guess ChatGPT 4 has wised up. I’m curious now. Will try it.

Edit: Yup, you’re right. It says “bro, you cray cray.” But if I tell it that it’s a recent math model, then it will say “Well, I guess in that model it’s 7, but that’s not standard.”

pretty hype for third ai winter tbh

It’s not going to happen. The previous AI winters happened because hardware just wasn’t there to do the math necessary.

Right now we absolutely have the hardware. Besides, AI is more than just ChatGPT. Object recognition and image/video analytics have been big business for about a decade now, and still growing strong. The technology is proven, matured, and well established at this point.

And there are other well established segments of AI that are, for the most part, boring to the average person. Stuff like dataset analytics, processing large amounts of data (scientific data, financial stuff, etc).

LLMs may have reached a point of diminishing returns (though I sincerely doubt it), but LLMs are a fraction of the whole AI industry. And transformer models are not the only kind of known model, and there’s nonstop research happening at breakneck speed.

There will never be another AI winter. The hype train will slow down eventually, but never another winter.

I think it depends on how you define AI winter. To me, the hype dying down is quite a winter. Hype dying -> less interest in AI in general. But will development stop? Of course not; in the previous AI winter, AI researchers didn’t stop either. But eventually there are fewer of them.

But that’s not how the industry defines AI winter. You’re thinking of hype in the context of public perception, but that’s not what matters.

Previous AI interest was about huge investments into research with the hope of a return on that investment. But since it didn’t pan out, the interest (from investors) dried up and progress drastically slowed down.

GPUs are what made the difference. Finally AI research could produce meaningful results and that’s where we’re at now.

Previously AI research could not exist without external financial support. Today AI is fully self-sustaining, meaning companies using AI are making a profit while also directing some of that money back into research and development.

And we’re not talking chump change, we’re talking hundreds of billions. Nvidia has effectively pivoted from a gaming hardware company to the number one AI accelerator manufacturer in the world.

There’s also a number of companies that have started developing and making analogue AI accelerators. In many cases they do the same workload for a fraction of the energy cost of a digital one (like the H100).

There’s so much happening every day and it keeps getting faster and faster. It is NOT slowing down anytime soon, and at this point it will never stop.

Today AI is fully self-sustaining, meaning companies using AI are making a profit

How can I verify this?

Look at the number of companies offering AI based video surveillance. That sector alone is worth tens of billions each year and still growing.

Just about every large company is using AI in some way. Google and Microsoft are using AI in their backend systems for things like spam filtering.

You’re thinking of AI as “ChatGPT” but the market for AI has been established well before ChatGPT became popular. It’s just the “new” guy on the scene that the news cycle is going crazy over.

I’m interested in LLMs and how they are being used because that’s what large sums of money are being thrown at, with very uncertain future returns.

I have no idea how LLMs are being used by private companies to generate profit.

What I do know is that other forms of AI are employed in cybersecurity, fintech, video surveillance, spam filtering, etc.

The AI video surveillance market is huge. This is a proven and mature segment worth tens of billions every year and still growing.

I find it interesting that you keep driving the point about LLMs and constantly ignore my point that AI is way bigger than just LLMs, and that AI is making billions of dollars for companies every year.

Oh, I see. If that’s how we would define it then yes, of course. I mean, I’ve already seen upscalers and other “AI” technologies being used on consumer hardware. That is actually useful AI. LLM usefulness compared to their resource consumption is IMHO not worth it.

LLM usefulness compared to their resource consumption is IMHO not worth it.

If you worked in that industry you’d have a different opinion. Using LLMs to write poetry or make stories is frivolous, but there are other applications that aren’t.

Some companies are using them to find new and better drugs, to solve diseases, invent new materials, etc.

Then there’s the consideration that a number of companies are coming out with AI accelerators that are analogue based and use a tiny fraction of the energy current systems use for the same workloads.

Some companies are using them to find new and better drugs, to solve diseases, invent new materials, etc.

I have seen claims of this sort refuted when the results of the work using LLMs are reviewed. For example.

That’s one company and one model referring only to material discovery. There are other models and companies.

I work in the field for a company with 40k staff and over 6 million customers.

We have about 100 dedicated data science professionals and we have 1 LLM we use for our chatbots vs a few hundred ML models running.

LLMs are overhyped and not delivering as much as people claim, most businesses doing LLM will not exist in 2-5 years because Amazon, Google and Microsoft will offer it all cheaper or free.

They are great at generating content but honestly most content is crap because it’s AI regurgitating something it’s been trained on. They are our next gen spam for the most part.

LLMs are overhyped and not delivering as much as people claim

I absolutely agree it’s overhyped, but that doesn’t mean useless. And these systems are getting better every day. And the money isn’t going to be in these massive models. It’s going to be in smaller domain-specific models. MoE models show better results over models that are 10x larger. It’s still 100% early days.

most businesses doing LLM will not exist in 2-5 years because Amazon, Google and Microsoft will offer it all cheaper or free.

I somewhat agree with this, but since the LLM hype train started just over a year ago, smaller open source fine-tuned models have been keeping ahead of the big players that are too big to shift quickly. Google even mentioned in an internal memo that the open source community had accomplished in a few months what they thought was literally impossible and could never happen (to prune and quantize models and fine-tune them to get results very close to larger models).

And there are always more companies that spring up around a new tech than the number that continue to exist after a few years. That’s been the case for decades now.

They are great at generating content but honestly most content is crap because it’s AI regurgitating something it’s been trained on.

Well, this is actually demonstrably false. There are many thorough examples of how LLMs can generate novel data, even papers written on the subject. But beyond generating new and novel data, the uses for LLMs go further than that. They are able to discern patterns, perform analysis, summarize data, problem solve, etc. All of which have various applications.

But ultimately, how is “regurgitating something it’s been trained on” any different from how we learn? The reality is that we ourselves can only generate things based on things we’ve learned. The difference is that we learn basically about everything. And we have a constant stream of input from all our senses as well as ideas/thoughts shared with other people.

Edit: a great example of how we can’t “generate” something outside of what we’ve learned is that we are 100% incapable of visualizing a 4 dimensional object. And I mean visualize in your mind’s eye like you can with any other kind of shape or object. You can close your eyes right now and see a cube or sphere, but you are incapable of visualizing a hyper-cube or a hyper-sphere, even though we can describe them mathematically and even render them with software by projecting them onto a 3D virtual environment (like how a photo is a 2D representation of a 3D environment).

/End-Edit

It’s not an exaggeration to say that neural networks are trained the same way biological neural networks (aka brains) are trained. But there’s obviously a huge difference in the inner workings.

They are our next gen spam for the most part.

Maybe the last gen models, definitely not the current gen SOTA models, and the models coming in the next few years will only get better. 10 years from now is going to look wild.

AI is not self-sustaining yet. Nvidia is doing well selling shovels, but most AI companies are not profitable. Stock prices and investor valuations are effectively bets on the future, not measurements of current success.

From this Forbes list of top AI companies, all but one make their money from something besides AI directly. Several of them rode the Web3 hype wave too, and that didn’t make them Web3 companies.

We’re still in the early days of AI adoption and most reports of AI-driven profit increases should be taken with a large grain of salt. Some parts of AI are going to be useful, but that doesn’t mean another winter won’t come when the bubble bursts.

AI is absolutely self-sustaining. Just because a company doesn’t “only do AI” doesn’t matter. I don’t even know what that would really look like. AI is just a tool. But it’s currently an extremely widely used tool. You don’t even see 99% of the applications of it.

How do I know? I worked in that industry for a decade. Just about every large company on the planet is using some form of AI in a way that increases profitability. There’s enough return on investment that it will continue to grow.

all but one make their money from something besides AI directly.

This is like saying only computer manufacturers make money from computers directly, whereas everyone and their grandmas use computers. You’re literally looking at the news cycle about ChatGPT and making broad conclusions about an AI winter based solely on that.

Industries like fintech and cybersecurity have made permanent shifts into AI years ago and there’s no going back. The benefits of AI in these sectors cannot be matched by traditional methods.

Then, like I said in my previous comment, there are industries like security and video surveillance where object recognition, facial recognition, ALPR, video analytics, etc, have been going strong for over a decade and it’s still growing and expanding. We might reach a point where the advancements slow down, but that’s after the tech becomes established and commonplace.

There will be no AI winter going forward. It’s done.

You’re using “machine learning” interchangeably with “AI.” We’ve been doing ML for decades, but it’s not what most people would consider AI and it’s definitely not what I’m referring to when I say “AI winter.”

“Generative AI” is the more precise term for what most people are thinking of when they say “AI” today and it’s what is driving investments right now. It’s still very unclear what the actual value of this bubble is. There are tons of promises and a few clear use-cases, but not much proof on the ground of it being as wildly profitable as the industry is saying yet.

You’re using “machine learning” interchangeably with “AI.”

Machine learning, deep learning, generative AI, object recognition, etc, are all subsets or forms of AI.

“Generative AI” is the more precise term for what most people are thinking of when they say “AI” today and it’s what is driving investments right now.

It doesn’t matter what people are “thinking of”, if someone invokes the term “AI winter” then they better be using the right terminology, or else get out of the conversation.

There are tons of promises and a few clear use-cases, but not much proof on the ground of it being as wildly profitable as the industry is saying yet.

There are loads and loads of proven use cases, even for LLMs. It doesn’t matter if the average person thinks that AI refers only to things like ChatGPT, the reality is that there is no AI winter coming and AI has been generating revenue (or helping to generate revenue) for a lot of companies for years now.

Also the compounding feedback loop. AI is helping chips get fabbed faster, designed better and faster, etc.

I think increasingly specialized models and analog systems that run them will be increasingly prevalent.

LLMs at their current scales don’t do enough to be worth their enormous cost… And adding more data is increasingly difficult.

That said: the gains on LLMs have always been linear based on recent research. Emergence was always illusory.

I’d like to read the research you alluded to. What research specifically did you have in mind?

Sure: here’s the article.

https://arxiv.org/abs/2304.15004

The basics are that:

- LLM “emergent behavior” has never been consistent; it has always been specific to some types of testing. For example, SAT results appeared to show emergent behavior once a model got above a certain number of parameters, because it went from missing most questions to missing fewer.

- They compared the emergent behavior of the LLM to all the other ways it only grew linearly and found a pattern: emergence was only being displayed in nonlinear metrics. If your metric didn’t have a smooth transition between wrong, less wrong, sorta right, and right, then the LLM would appear emergent without actually being so.
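To make that second point concrete, here’s a toy sketch (my own made-up numbers, not data from the paper). It assumes a hypothetical model whose per-token accuracy improves in equal steps as it scales, and that token errors are independent. Scored per token, progress looks smooth; scored as all-or-nothing exact match on a 20-token answer, the same model looks like it suddenly “gets it” near the end.

```python
# Toy illustration only: all numbers are assumptions, not results from the paper.

STEPS = 10        # hypothetical number of model sizes, smallest to largest
ANSWER_LEN = 20   # hypothetical answer length in tokens


def per_token_accuracy(step: int) -> float:
    """Smooth metric: rises in equal increments from 0.50 to 0.95."""
    return 0.50 + 0.45 * step / (STEPS - 1)


def exact_match(p: float, answer_len: int = ANSWER_LEN) -> float:
    """All-or-nothing metric: every token must be right.

    Assumes token errors are independent, so the chance of a fully
    correct answer is p raised to the answer length.
    """
    return p ** answer_len


# One row per model size: (step, per-token accuracy, exact-match accuracy)
rows = [
    (step, per_token_accuracy(step), exact_match(per_token_accuracy(step)))
    for step in range(STEPS)
]

for step, p, em in rows:
    print(f"size step {step}: per-token={p:.2f}  exact-match={em:.4f}")
```

The per-token column climbs by the same amount every step, while the exact-match column sits near zero for the first half and then shoots up. Same underlying model, different metric.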


What we haven’t hit yet is the point of diminishing returns for model efficiency. Small, locally run models are still progressing rapidly, which means we’re going to see improvements for the everyday person instead of just for corporations with huge GPU clusters.

That in turn allows more scientists with lower budgets to experiment on LLMs, increasing the chances of the next major innovation.

Exactly. We’re still very early days with this stuff.

The next few years will be wild.

If we really have changed regimes, from rapid progress to diminishing returns, and hallucinations and stupid errors do linger, LLMs may never be ready for prime time.

…aaaaaaaaand the AI cult just canceled Gary Marcus.

I mean, LLMs already are prime time. They’re capable of tons of stuff. Even if they don’t gain a single new ability from here onward they’re still revolutionary and their impact is only just becoming apparent. They don’t need to become AGI.

So speculating that they “may never be ready for prime time” is just dumb. Perhaps he’s focused on just one specific application.

In truth, we are still a long way from machines that can genuinely understand human language. […]

Indeed, we may already be running into scaling limits in deep learning, perhaps already approaching a point of diminishing returns. In the last several months, research from DeepMind and elsewhere on models even larger than GPT-3 have shown that scaling starts to falter on some measures, such as toxicity, truthfulness, reasoning, and common sense.

- Gary Marcus in Deep Learning Is Hitting a Wall, published March 10th, 2022, a year and four days before GPT-4 was released

I’ve rarely seen anyone so committed to being a broken clock in the hope of being right at least once a day.

Of course, given he built a career on claiming a different path was needed to get where we are today, including a failed startup in that direction, it’s a bit like the Upton Sinclair quote about not expecting someone to understand a thing their paycheck depends on them not understanding.

But I’d be wary of giving Gary Marcus much consideration.

Generally as a futurist if you bungle a prediction so badly that about a year after you were talking about diminishing returns in reasoning a product comes out exceeding even ambitious expectations for reasoning capabilities in an n+1 product, you’d go back to the drawing board to figure out where your thinking went wrong and how to correct it in the future.

Not Gary though. He just doubled down on being a broken record. Surely if we didn’t hit diminishing returns then, we’ll hit them eventually, right? Just keep chugging along until one day those predictions are right…