There are some explanations here, but I can’t follow any of them.

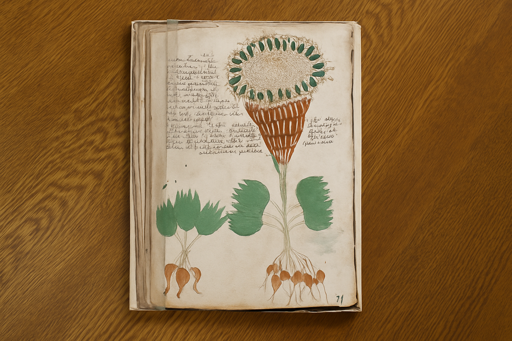

First you start with a lace pattern: make red and white strands of glass, and twist them together. Like this, but imagine it’s hot glass (not so hot that it’s liquid, but hot enough to be malleable):

Then do the same with blue and white. Finally, lay them side by side and heat them up until they fuse together. You’ll get a “sheet” of checkerboard glass; now all you need to do is gently heat it up just enough to shape it into a plate.

If I were in charge of the defence department of a government, there’s no way I’d ever allow closed-source software to even touch machines with sensitive data. Or even pre-compiled software. The problem is not what you see, like personnel; it’s what you don’t see, like bribery and backdoors.

Let alone use “cloud” computing. Come on… it’s someone else’s computer.