How can anyone hate someone so much, when she never did anything to them?

Imagine that your life is terrible. You’re poor, desperate, maybe ill too. You live with your parents, who are also poor and desperate, and the lot of you self-medicate with copious amounts of alcohol.

You’re miserable, and not terribly bright. Then one day, your primary media sources and even the governing political party start parroting the same lie: that the reason your life is shit is because trans people and immigrants exist. No one with a similarly large platform is telling you the truth.

Now consider how many millions of people have been abandoned by the state in this country. Consider how many poor and desperate people we’ve made in the last 10 years, and the incentive the owning class has to lay the blame for that at the feet of anyone but themselves.

Hate is learnt, and the teachers are holding all the money & power.

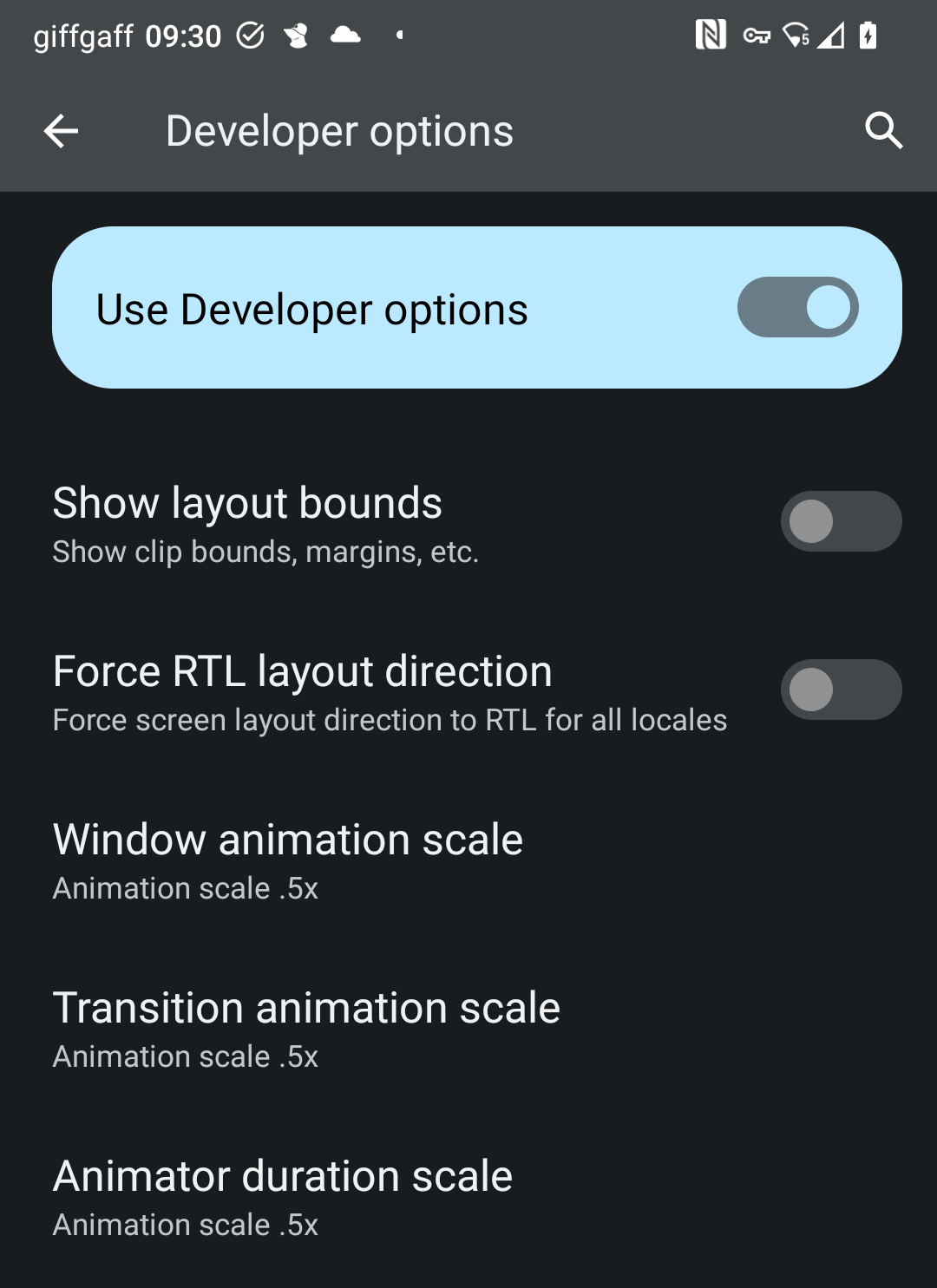

Please don’t give this any credit. Nonsense like this is already being used to filter web form submissions for things like job applications.

Source: I applied for a role at a medium-sized company a couple weeks ago and was auto-rejected because my cover letter appeared to be AI-generated. I clicked “back”, removed an em-dash, and the form was accepted.