- cross-posted to:

- [email protected]

- [email protected]

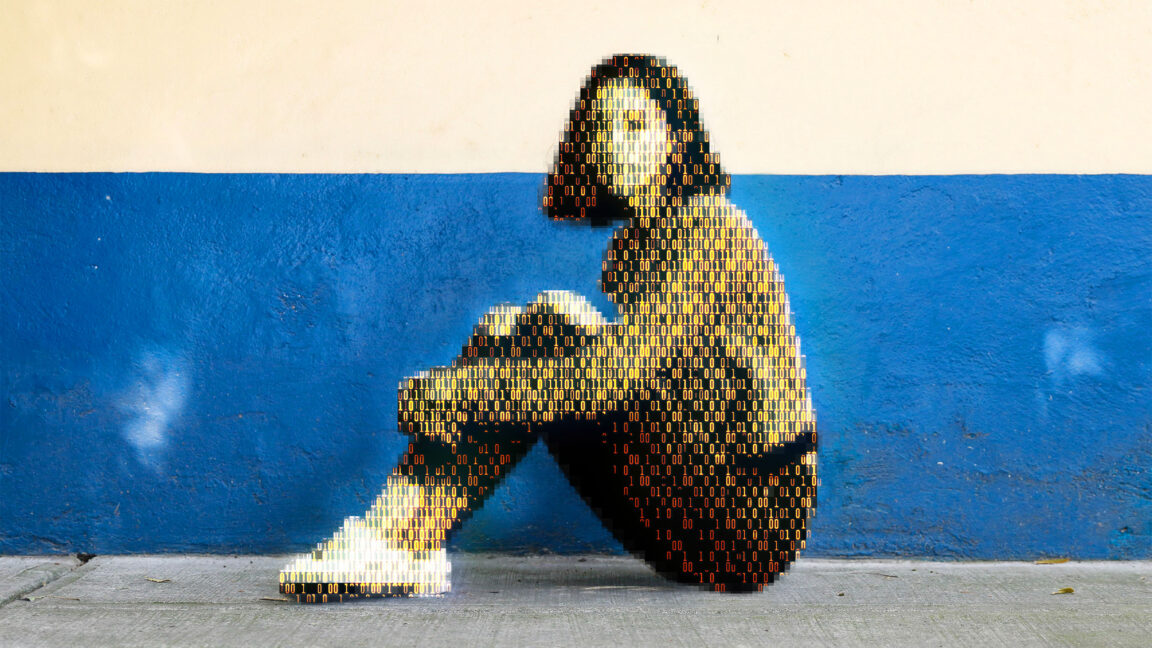

Today, a prominent child safety organization, Thorn, in partnership with a leading cloud-based AI solutions provider, Hive, announced the release of an AI model designed to flag unknown CSAM at upload. It’s the earliest AI technology striving to expose unreported CSAM at scale.

https://en.m.wikipedia.org/wiki/False_positives_and_false_negatives

Not that I think you will understand. I’m posting this mostly for those moronic enough to read your comments and think “that seems reasonable.”

Thanks