- cross-posted to:

- [email protected]

- [email protected]

The memory management changes expected to be merged for the upcoming Linux 6.11 cycle include more fine-tuned control over the swappiness setting, which determines how aggressively pages are swapped out of physical system memory and into the on-disk swap space.

With the new code from Meta, memory.reclaim supports a swappiness argument. This effectively allows finer-grained control over the swappiness behavior without overriding the global swappiness setting.

TL;DR - We can now control swappiness per cgroup instead of just globally. This is something that userspace oom killers will want to use.
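For anyone wondering what that looks like in practice, here is a minimal sketch of a proactive reclaim request, assuming cgroup v2 and the `<amount> swappiness=<val>` format described in the patch. The helper names and the 0..200 range check (borrowed from the global `vm.swappiness` range) are my own assumptions, not part of the kernel interface.

```python
from pathlib import Path
from typing import Optional


def build_reclaim_request(amount: str, swappiness: Optional[int] = None) -> str:
    """Build the string written to memory.reclaim, e.g. '1G swappiness=0'."""
    if swappiness is None:
        # Without the argument the kernel falls back to its usual swappiness.
        return amount
    if not 0 <= swappiness <= 200:
        # Assumption: same range as the global vm.swappiness sysctl.
        raise ValueError("swappiness is assumed to be in the range 0..200")
    return f"{amount} swappiness={swappiness}"


def proactive_reclaim(cgroup_dir: str, amount: str,
                      swappiness: Optional[int] = None) -> None:
    """Ask the kernel to reclaim `amount` from one cgroup.

    Needs write access to the cgroup; the write can fail with OSError
    if the kernel could not reclaim the requested amount.
    """
    request = build_reclaim_request(amount, swappiness)
    Path(cgroup_dir, "memory.reclaim").write_text(request)


if __name__ == "__main__":
    # Only prints the request; pass a real cgroup directory to
    # proactive_reclaim() to actually trigger reclaim.
    print(build_reclaim_request("512M", swappiness=0))
```

With `swappiness=0` the request asks for reclaim that avoids swapping out anonymous memory, which is the SSD-friendly case the patch description is about.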

I forced a shutdown of my Linux server by holding down the power button last night after it had been thrashing the harddisk for I don’t know how long.

Wouldn’t respond to SSH so I just gave up, guess I could have tried to plug in a keyboard and use some magic keys.

Maybe I should just remove the swapfile and let it kill something before it gets to that state. Or is that what the swappiness setting is supposed to prevent? Only swap out stuff that is not actively used? In any case, the defaults don’t seem to work very well for me.

Guess I’ll have to go boot it again today and try to find out what went wrong from the logs.

Look into `earlyoom` or `systemd-oomd`. The kernel out-of-memory killer only starts killing processes well after it should; it will happily deadlock itself in a memory swap loop before considering killing any process. There are a lot of other ways to fine-tune the kernel to prevent this, but these tools are a good starting point to prevent your system from freezing. Just keep in mind that they will kill processes when memory is running out until enough memory is available.
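To give a rough idea of what these userspace killers check, here is a small sketch of the decision step only. It assumes the rule "act when both available memory and free swap fall below a percentage threshold"; the 10% defaults and the function names are illustrative assumptions, not code from either tool.

```python
def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo-style text into {field: value in kB}."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])
    return info


def should_kill(info: dict, mem_min_pct: float = 10.0,
                swap_min_pct: float = 10.0) -> bool:
    """Return True when both available RAM and free swap are below threshold."""
    mem_pct = 100.0 * info["MemAvailable"] / info["MemTotal"]
    swap_total = info.get("SwapTotal", 0)
    # With no swap configured, treat swap as exhausted so only RAM counts.
    swap_pct = 100.0 * info.get("SwapFree", 0) / swap_total if swap_total else 0.0
    return mem_pct < mem_min_pct and swap_pct < swap_min_pct


# Made-up sample values, in the same layout as /proc/meminfo.
SAMPLE = """MemTotal:       16000000 kB
MemAvailable:     800000 kB
SwapTotal:       4000000 kB
SwapFree:         200000 kB
"""

if __name__ == "__main__":
    print(should_kill(parse_meminfo(SAMPLE)))
```

On a real system you would feed it the contents of `/proc/meminfo` in a loop; the actual tools also decide which process to kill, which this sketch leaves out.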

Thanks, will have a look for next time. Too bad those are not the defaults.

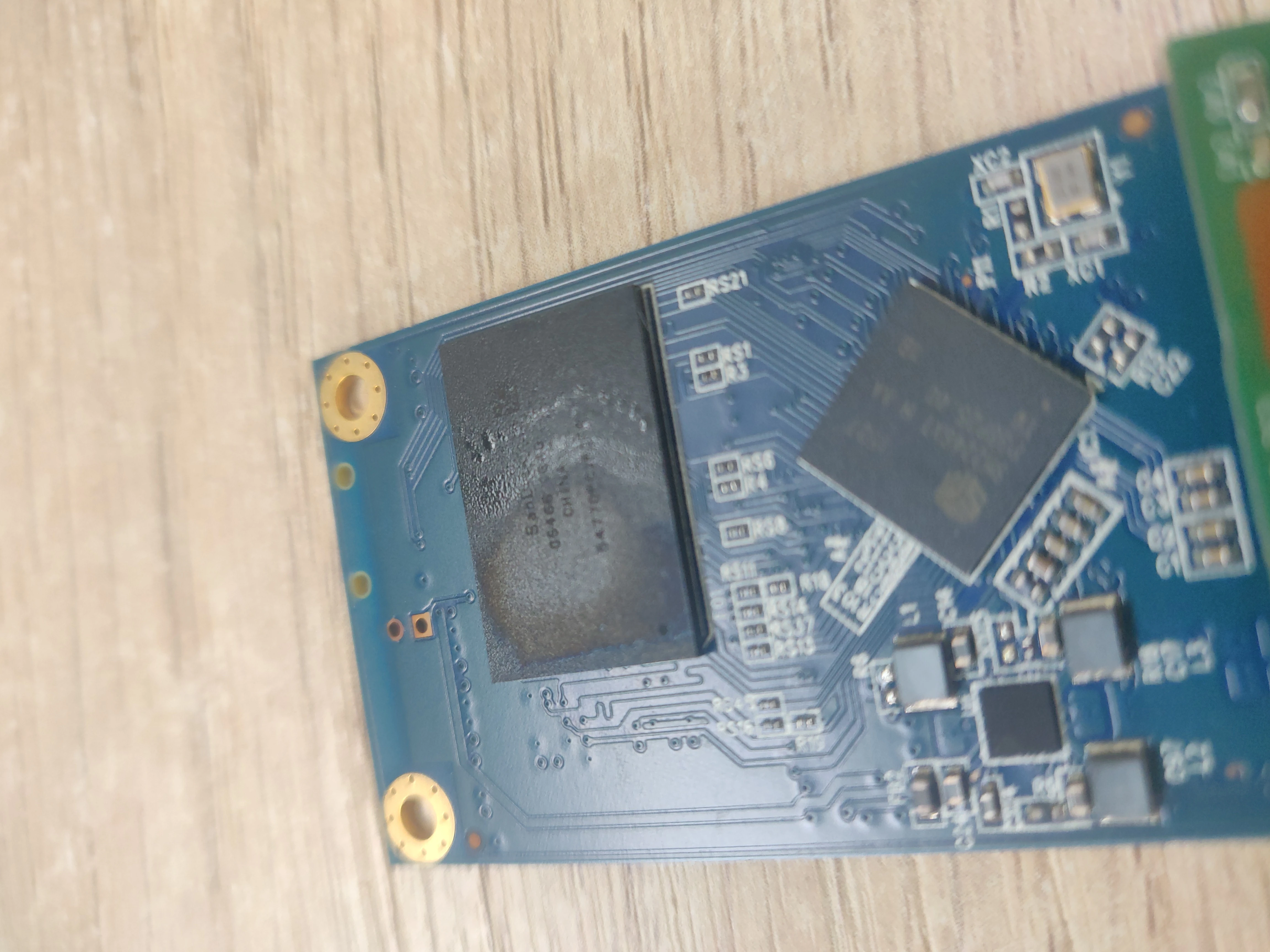

For now it seems my SSD is fried (even though swap wasn’t on that disk): lots of I/O errors and a suspiciously toasted-looking chip.

Ouch, hope you can get that sorted out. A broken disk may also “deadlock” the system when binaries it tries to start are on that disk and no longer in cache, e.g. sshd or your shell.

In my experience, when only ping sporadically works it’s an OOM issue; if the SSH login fails weirdly it can also be an I/O issue. That assumes your network is working as expected, obviously.

Neat, but I’d really like it to just handle memory properly without me having to tweak swap and OOM settings at all. Windows and Mac can do it. Why can’t Linux? I have 32GB of RAM and some more zswap and it still regularly runs out of RAM and hard resets. Meanwhile my 16GB Windows machine from 2012 literally never has problems.

I wonder why there’s such a big difference. I guess Windows doesn’t have over-commit which probably helps apps like browsers know when to kick tabs out of memory (the biggest offender on Linux for me is having lots of tabs open in Firefox), and Windows doesn’t ignore the existence of GUIs like Linux does so maybe it makes better decisions about which processes to move to swap… but it feels like there must be something more?

Other parts of your Linux OS can now interact with these tools without you having to do it manually.

This is the best summary I could come up with:

The memory management changes expected to be merged for the upcoming Linux 6.11 cycle include more fine-tuned control over the swappiness setting, which determines how aggressively pages are swapped out of physical system memory and into the on-disk swap space.

This effectively allows finer-grained control over the swappiness behavior without overriding the global swappiness setting.

Dan Schatzberg of Meta explains in the patch adding swappiness= support to memory.reclaim: Allow proactive reclaimers to submit an additional swappiness=[val] argument to memory.reclaim.

However, proactive reclaim runs continuously and so its impact on SSD write endurance is more significant.

Therefore, it’s desirable to have proactive reclaim reduce or stop swap-out before the threshold at which OOM killing occurs.

This has been in production for nearly two years and has addressed our needs to control proactive vs reactive reclaim behavior but is still not ideal for a number of reasons:

The original article contains 474 words, the summary contains 151 words. Saved 68%. I’m a bot and I’m open source!

Don’t get me wrong; I love this. This is fantastic. However, I have only one thing to say: mhwahahahahahhaa!

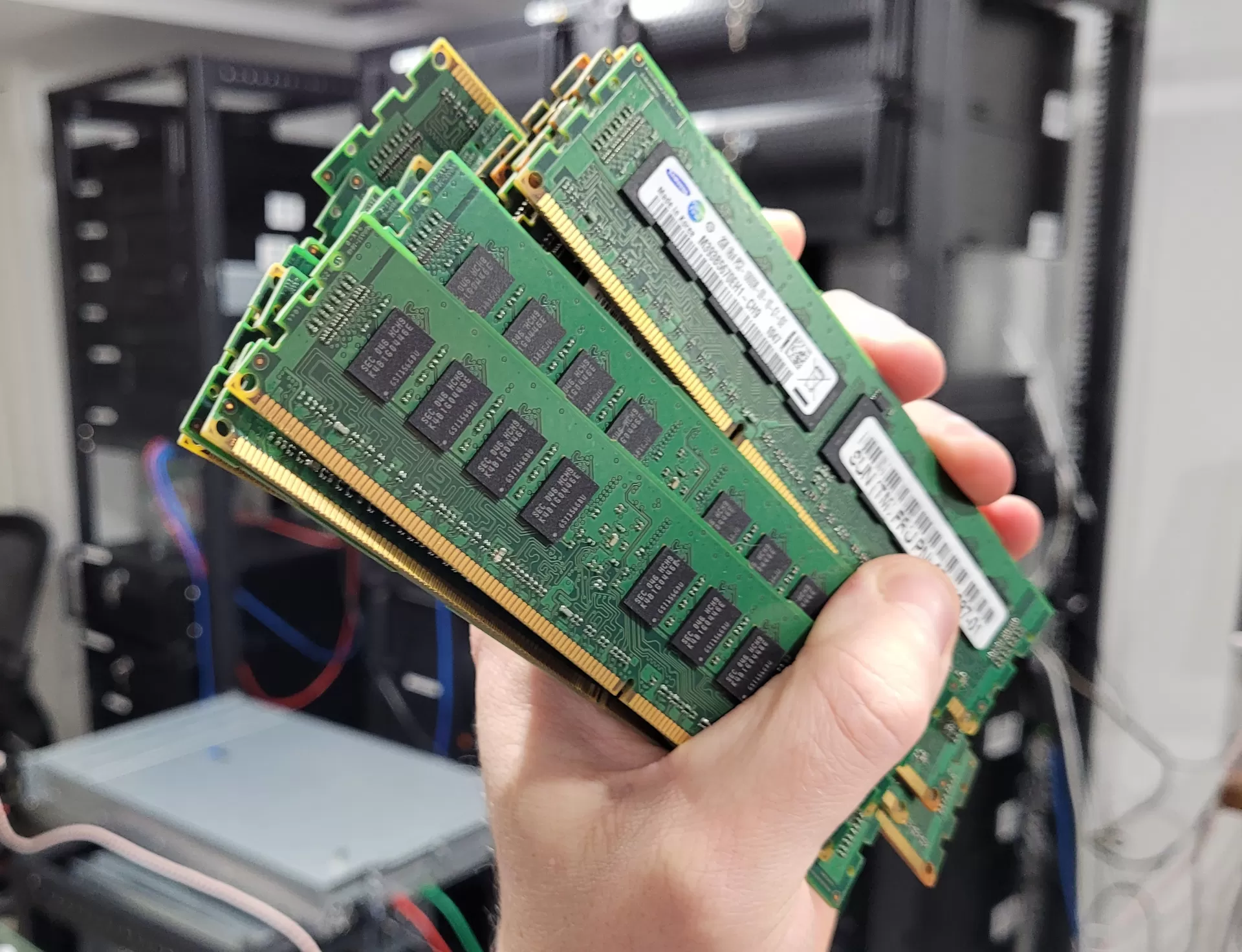

The last time I upgraded my desktop computer, I said “F it” and maxed out the RAM and put 64GB in it. It’s an AMD with integrated GPU that immediately takes over 2GB RAM – and I still have yet to do anything that has caused it to drop below 50% free memory. It’s exhilarating.

TBF, I spent years on a more memory-constrained laptop and my workflow became centered around minimalism: tiling WM, no DE, mostly terminal clients for everything but the web. When I got the new computer, with wild abandon I tried all the gluttons: KDE, Gnome… you know, all of them. The eye candy just wasn’t worth the PITA of the mousie-ness of them, and I eventually went back to Herbstluftwm and my shells. Now, when I do run greedy apps - usually some Electron crap - what bugs me is the constant CPU suck even at idle, so I find a shell alternative.

I guess it’s an irony that I live in a land of memory plenty and never need more than half what I have available. But I still get a little thrill when I do notice my memory use and I’ve got 70% free. Makes me want to code up a little program with an intentional memory leak, just for fun, y’know?

I’m not sure you understand what swap actually is, because even machines with 1TB of RAM have swap partitions. Just in case, read this post from a developer working on the swap module in Linux: https://chrisdown.name/2018/01/02/in-defence-of-swap.html

That article is an excellent resource, BTW, thank you. However, it nowhere says anything about swapping being used when you have more memory than you use.

1TB of memory is not a lot, for many applications, so just saying “this guy has 1TB memory and look what he thinks of swap” doesn’t mean much. If he’s processing LLMs or really any non-trivial DB (read: any business DB), then that memory is being used.

Having space in memory so that you never have to swap is always better than needing to swap, and nothing in Chris’ article says anything counter to that. What he mainly argues is that swap is better than OOM killers, having configurations that lead to memory contention in the first place, or seeking alternative strategies to turning off swap.

The fact is, I could turn on swap, but it would never get used because I’m not doing anything that requires heavy memory use. Even running KDE and several Java and Electron apps, I wouldn’t run out of physical memory. I’ll run into CPU constraints long before I run into memory contention issues.

Frankly, if my system allowed me to have, say, 40GB instead of 64, I’d have done that. I only want to not have to use swap - because never using swap is always preferable to needing it - and slightly more than 32GB is where I happen to land. But I can only have symmetric memory modules, and all memory comes in powers-of-2 sizes, and 64GB is affordable.

Again, Chris’ essay says only that swap is better than many alternatives people seek; not that swap is better than being able to not exhaust physical RAM.

As a final point, the other type of swapping is between types of physical memory: between L1 and L2, and between cache and main memory. That’s not what Chris is talking about, nor what the swappiness tuning in the OP article is about. Both of those concern swapping between memory and persistent storage.

If he’s processing LLMs or really any non-trivial DB (read: any business DB)

Actually… as a former DBA on large databases, you typically want to minimize swapping on a dedicated database system. Most database engines do a much better job at keeping useful data in memory than the Linux kernel’s file caching, which is agnostic about what your files contain. There are some exceptions, like elasticsearch which almost entirely relies on the Linux filesystem cache for buffering I/O.

Anyway, database engines have query optimizers to determine the optimal path to resolve a query, but they rely on the buffers that they consider to be “in memory” actually residing in physical memory, and not sitting in a swapfile somewhere.

So typically, on a large database system the vendor recommendation will be to set `vm.swappiness=0` to minimize memory pressure from filesystem caching, and to set the database buffers as high as the amount of memory you have in your system minus a small amount for the operating system.
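If you want to check what a box is currently set to before changing anything, something like this works as a rough sketch. It reads the standard `/proc/sys/vm/swappiness` file and renders a line in the `key = value` form used by sysctl configuration files; the function names and the 0..200 range check (the range on recent kernels, as far as I know) are my assumptions.

```python
from pathlib import Path

SWAPPINESS_PATH = "/proc/sys/vm/swappiness"


def read_swappiness(path: str = SWAPPINESS_PATH) -> int:
    """Return the current global swappiness as an integer."""
    return int(Path(path).read_text().strip())


def sysctl_fragment(value: int) -> str:
    """Render a sysctl configuration line for the given swappiness."""
    if not 0 <= value <= 200:
        # Assumption: valid range on recent kernels; older ones stop at 100.
        raise ValueError("swappiness is assumed to be in the range 0..200")
    return f"vm.swappiness = {value}\n"


if __name__ == "__main__":
    # Prints the line you would place in a sysctl configuration file.
    print(sysctl_fragment(0), end="")
```

The sketch only prints the setting; applying it still means writing it to your sysctl configuration and reloading, or following whatever your database vendor documents.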

So in other words, you paid for 32 GB that you have so far never used.

Yup! And it’s glorious.

It’s far better to have too much, than too little.

Linux can run fine in 2 GB with a desktop. I forgot the URL of the page, but obligatory “Linux ate my RAM” reference: your RAM is used as a cache.
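To see the difference between "not free" and "actually used", here is a small sketch that compares the naive number (total minus `MemFree`) with the one that treats reclaimable cache as available (total minus `MemAvailable`). The sample figures are made up and the function names are my own.

```python
def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo-style text into {field: value in kB}."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])
    return info


def used_percentages(info: dict) -> tuple:
    """Return (naive_used_pct, effective_used_pct).

    naive counts page cache as used; effective uses the kernel's
    MemAvailable estimate, which treats reclaimable cache as available.
    """
    total = info["MemTotal"]
    naive = 100.0 * (total - info["MemFree"]) / total
    effective = 100.0 * (total - info["MemAvailable"]) / total
    return round(naive, 1), round(effective, 1)


# Made-up sample for a 2 GB machine with a warm page cache.
SAMPLE = """MemTotal:        2000000 kB
MemFree:          100000 kB
MemAvailable:    1200000 kB
Buffers:           50000 kB
Cached:          1000000 kB
"""

if __name__ == "__main__":
    naive, effective = used_percentages(parse_meminfo(SAMPLE))
    print(f"naive: {naive}% used, effective: {effective}% used")
```

In this sample the machine looks 95% full if you only count free pages, but only 40% is memory that could not be reclaimed on demand.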