It’s a reason not to buy a new PC for me. Or ever upgrade to Windows 11. I’ll wait for 12 or just go Linux.

i never even liked w10 and 11 seemed just horrible. when i heard about the copilot thing last summer, i decided to start getting familiar with linux so that when 10 dies, i won’t have to use 11 and the AI abomination it evolves into. i soon ditched 10 completely after seeing how much nicer mint is. for my school projects i still need windows on my laptop but after that’s over, laptop gets mint as well.

Yeah fortunately I’m familiar with Linux from my work and using it on my Steam Deck.

I’m just incredibly lazy so I’ve been waiting for a reason to pull the trigger, either W10 hitting EOL or a surprise forced upgrade.

While it sounds pretty useless, I do feel vastly more comfortable with the idea of making use of an AI assistant if it’s locally processed. I do try not to just dismiss everything new like a Luddite. That said, so far, despite all the press and attention, I haven’t personally found a single use for any of the recent crop of products and services branded as AI in the past 3-4 years. If, however, new use cases pop up and it becomes a part of our lives in ways we didn’t expect but then can’t live without, I’d very much appreciate it running on my own metal.

I don’t think Windows’ Copilot is locally processed? Could very well be wrong but I thought it was GPT-4 which is absurd to run locally.

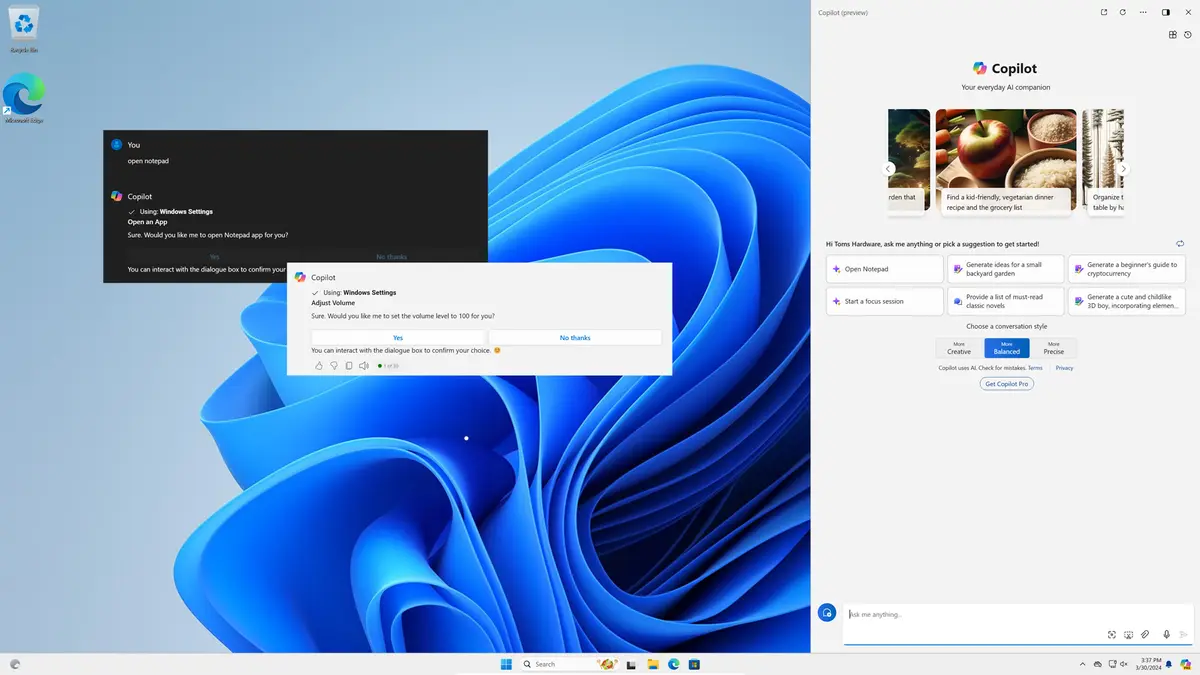

The article is about the new generation of Windows PCs using an Intel CPU with a Neural Processing Unit, which Windows will use for local processing of Windows Copilot. The author thinks this is not reason enough to buy a computer with this capability.

You’re totally right. I started reading the article, got distracted, and thought I’d already read it. I agree with you then.

I still don’t trust Microsoft to not phone all your inputs home though.

Usually there is a massive VRAM requirement. Local neural networking silicon doesn’t solve that, but using a more lightweight and limited model could.

Basically don’t expect even GPT-3, but SOMETHING could be run locally.

Ugh so even less reason to think it’s worth anything.

Cortana worked damn well for a while. Feature rich, able to understand me better than Google’s assistant. And despite this they found no way to earn money on it and just gave up. Not by just disconnecting her, but by slowly stripping her of every useful feature.

I don’t trust Copilot to stay around. They’re gonna try a few half-hearted attempts at capitalizing on it, then just give up. Like they did with Cortana.

Well, they had to let Cortana go before the Rampancy reared its ugly head

They charge for copilot for enterprise applications. Works mostly the same afaik

It’s the same story with Google Assistant. Started out as Google Now and was genuinely useful, but there apparently wasn’t any real way of monetising it so we got Google Assistant/Discover and now Gemini.

They couldn’t figure it out for consumers, but their machine learning systems built around natural language processing are mostly based on the data they gathered from people using Cortana. They just pivoted her to be a business tool instead. She was also the basis for the chat bot AI tools prior to the LLMs. Azure and its offerings are a very interesting beast.

They did eventually capitalize on it, they just realized the consumer-facing version wasn’t working so they retooled it for corporate.

I like Copilot. I use Linux on my computer, but I have the Bing chat thing on my phone specifically for Copilot.

I find it pretty useful and reliable

I have Super+B mapped to open Copilot on my Linux machine, as well as, like you, having the Copilot app on my phone. But I also have Jan mapped to open with Super+J.

I don’t know Jan, should I be looking into it?

If you have a decent computer, it can run pretty capable LLMs locally (without Internet, an account, or censorship) fairly fast.

Oh. I didn’t know that. Should I just look at videos on YouTube of how to install an LLM on my computer?

I don’t think that’s necessary, just download Jan.

Cool, I’ll definitely look into it, thanks