- 17 Posts

- 54 Comments

1·3 months ago

Was discussing this with a friend yesterday.

Civitai has a long history of bad moderation.

But bad moderation does not mean that you need stricter moderation.

Rather, Civitai’s moderation methods have alienated normal users from engaging with the website in a positive manner.

Give users the ability to generate what they want privately, on the condition that they keep it nice and tidy on the front end, and people will have the incentive and the capacity to restrain themselves in the public space.

Treat people fairly, and they will open up to you about the problems they see.

Set clear boundaries in the rules, and users can work the problem out for you, ensuring they get what they need without posing problems for the website.

If normal users leave or become dormant, there will be nobody to keep the crazies in line.

By that I mean people with kinks and motivations that fall well outside the scope of what one normally has to moderate on a website.

Once the normal users become inactive, the entire community becomes a madhouse.

I think that is more or less what has happened to Civitai at this point.

The active users are crazy, the normal users are dormant, the moderators have to work their *ss off to regulate everything 24/7, the website becomes a trash heap, and nobody cares about anything anymore.

2·9 months ago

My solution has been to right-click and select “Inspect Element” to open the browser’s HTML window.

Then zoom out on the generator as far as it goes, and scroll down so that the entire image gallery (or at least part of it) is rendered in the browser.

Then press Ctrl+C to copy the HTML, paste it into Notepad++, and use regular expressions to extract the image prompts (and image source links) from the HTML code.

Not exactly a good fix, but it gets the job done at least.
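For the regex step, here is a minimal Python sketch of the same idea. It assumes (hypothetically) that each gallery image in the copied HTML is an `<img>` tag whose `src` holds the image link and whose `alt` holds the prompt; the real generator markup may differ, so check it in the inspector and adjust the patterns. `extract_images` is a made-up helper name.

```python
import html
import re

# Hypothetical markup assumption: prompt in alt="...", image link in src="...".
IMG_TAG = re.compile(r"<img\b[^>]*>", re.IGNORECASE)
ATTR = re.compile(r'([\w-]+)\s*=\s*"([^"]*)"')


def extract_images(page_html):
    """Return (prompt, src) pairs for every <img> tag that has a src attribute."""
    results = []
    for tag in IMG_TAG.findall(page_html):
        # Collect the tag's double-quoted attributes, decoding entities like &amp;
        attrs = {name.lower(): html.unescape(value) for name, value in ATTR.findall(tag)}
        if "src" in attrs:
            results.append((attrs.get("alt", ""), attrs["src"]))
    return results


if __name__ == "__main__":
    sample = '<div><img src="https://example.com/a.jpg" alt="a cat &amp; a dog"><img alt="no source"></div>'
    for prompt, src in extract_images(sample):
        print(prompt, "->", src)
```

Regexes are fine for a one-off scrape like this; for anything sturdier, a real HTML parser copes better with single-quoted or unquoted attributes.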

3·9 months ago

Big ask.

Personally, I’d be overjoyed just to have embeddings available on the site.

1·9 months ago

It is good that you ask :)!

Read this: https://arxiv.org/abs/2406.02965

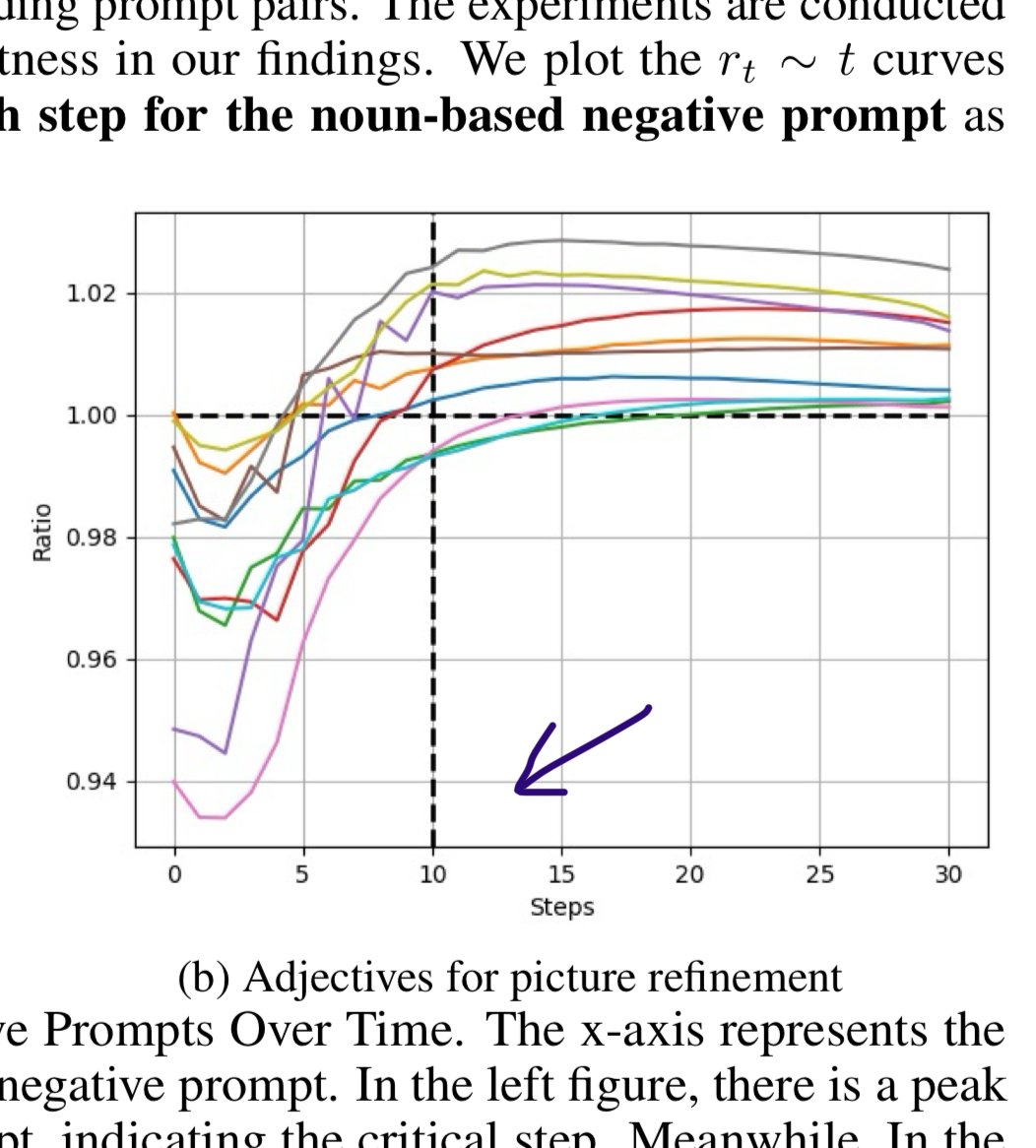

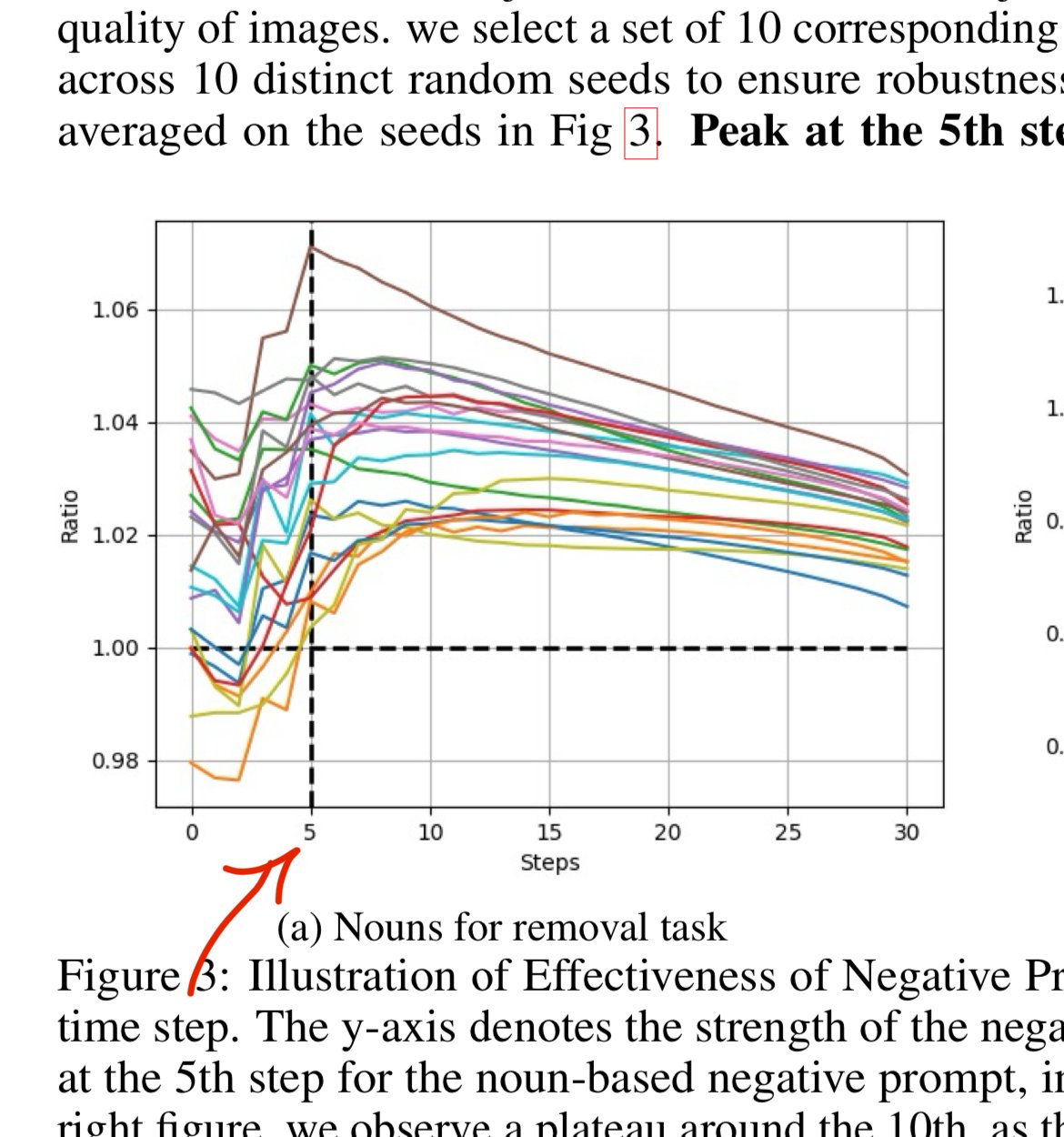

The TL;DR:

Negatives should be ‘things that appear in the image’.

If you prompt a picture of a cat, then ‘cat’ or ‘pet’ can be useful items to place in the negative prompt.

For adjectives, the best elimination comes from a 16.7% delay: write `[:neg1 neg2 neg3:0.167]` in the negative prompt instead of `neg1 neg2 neg3`.

For nouns, the best elimination comes from a 30% delay: write `[:neg1 neg2 neg3:0.3]` in the negative prompt instead of `neg1 neg2 neg3`.
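To make the syntax concrete, here is a small Python sketch that builds the delayed negative string and estimates the step where it kicks in. It assumes the AUTOMATIC1111 WebUI prompt-editing syntax `[from:to:when]` with an empty `from`, so nothing is applied before the switch. The helper names are hypothetical, and `switch_step` is my reading of how the WebUI scales a fractional `when`, not a documented guarantee for every UI.

```python
def delayed_negative(terms, delay):
    """Build an A1111-style prompt-editing string [:to:when].

    Before the switch point the negative prompt is empty; afterwards `terms` apply.
    """
    if not 0.0 < delay < 1.0:
        raise ValueError("delay should be a fraction of the total steps, between 0 and 1")
    return f"[:{' '.join(terms)}:{delay}]"


def switch_step(delay, steps):
    """Approximate step after which the delayed terms take effect.

    Assumption: a fractional `when` is scaled as int(delay * steps), capped at
    the step count. Other front ends may schedule this differently.
    """
    return min(steps, int(delay * steps))


if __name__ == "__main__":
    print(delayed_negative(["cat", "pet"], 0.3))  # [:cat pet:0.3]
    print(delayed_negative(["blurry", "dark"], 0.167), switch_step(0.167, 30))
```

With 30 sampling steps, a 0.167 delay means the negatives stay off for roughly the first 5 steps.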

3·10 months ago

I appreciate that you took the time to write a sincere question.

Kinda rude for people to downvote you.

1·10 months ago

Simple and cool.

Florence-2 image captioning sounds interesting to use.

Do people know of any other image-to-text models (apart from CLIP)?

2·10 months ago

Wow, yeah, I found a demo here: https://huggingface.co/spaces/Qwen/Qwen2.5

A whole host of LLMs seems to have been released. Thanks for the tip!

I’ll see if I can turn them into something useful 👍

2·10 months ago

That’s good to know. I’ll try them out. Thanks.

1·10 months ago

Hmm. I mean, the FLUX model looks good, so maybe there is some magic in the T5?

I have no clue, so any insights are welcome.

T5 on Hugging Face: https://huggingface.co/docs/transformers/model_doc/t5

T5 paper: https://arxiv.org/pdf/1910.10683

Any suggestions on which LLM I ought to use instead of T5?

2·10 months ago

Good find! Fixed. Much appreciated.

2·10 months ago

Fair enough

3·10 months ago

I get it. I hope you don’t interpret this as arguing against your results, etc.

What I want to say is this:

If implemented correctly, the same seed does give the same output for a given prompt.

If there is variation, then something in the pipeline must be approximating things.

This may be good (for performance), or it may be bad.
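A tiny Python illustration of both points, with caveats: `initial_noise` is a hypothetical toy stand-in for a diffusion model's seeded starting noise (real pipelines typically use a framework RNG, such as a PyTorch generator), and the float example only shows one generic way results can drift, not where any particular pipeline actually varies.

```python
import random


def initial_noise(seed, n=4):
    """Toy stand-in for a sampler's starting latent noise: n Gaussian draws."""
    rng = random.Random(seed)  # private generator seeded only by `seed`
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


# Same seed, same implementation: identical starting noise every time.
assert initial_noise(1234) == initial_noise(1234)

# One way "approximation" sneaks in: floating-point addition is not associative,
# so summing the same numbers in a different order (e.g. a different GPU
# reduction order) can give a slightly different result.
left_to_right = (0.1 + 0.2) + 0.3
right_to_left = 0.1 + (0.2 + 0.3)
print(left_to_right, right_to_left, left_to_right == right_to_left)  # 0.6000000000000001 0.6 False
```

Tiny differences like this can compound over many denoising steps, which is why "same seed" only guarantees the same picture when every step is computed identically.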

You are 100% correct to flag this issue to the dev.

That said, it’s not a legal document or a science paper.

It’s just a guide to explain seeds to newbies.

Omitting non-essential information for the sake of making the concept clearer can be good too.

1·10 months ago

The Perchance dev is correct here, Allo: the same seed will generate the exact same picture.

If you see variation, it will be due to factors outside the SD model. That stuff happens.

But it’s good that you fact check stuff.

1·10 months ago

Do you know where I can find documentation on the Perchance API?

Specifically, `createPerchanceTree`?

I need to know which functions there are, and what inputs/outputs they take.

2·10 months ago

Thanks! I appreciate the support. It helps a lot to know where to start looking ( ; v ;)b!

1·10 months ago

New stuff

Paper: https://arxiv.org/abs/2303.03032

Takes only a few seconds to calculate.

Most similar suffix tokens: "vfx "

Most similar prefix tokens: “imperi-”
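For readers wondering what "most similar tokens" means mechanically: one common approach is to rank vocabulary embeddings by cosine similarity to a target embedding. The Python sketch below does exactly that on made-up 3-D vectors. It is a generic illustration, not necessarily the method of the paper above, and the token names and numbers are invented.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar_tokens(target, vocab, k=3):
    """Return the k tokens whose embeddings point most nearly in target's direction."""
    ranked = sorted(vocab, key=lambda tok: cosine(target, vocab[tok]), reverse=True)
    return ranked[:k]


# Invented toy embeddings, purely for illustration.
toy_vocab = {
    "token_a": [0.9, 0.1, 0.0],
    "token_b": [0.0, 1.0, 0.0],
    "token_c": [0.7, 0.0, 0.7],
}
print(most_similar_tokens([1.0, 0.0, 0.0], toy_vocab, k=2))  # ['token_a', 'token_c']
```

A real tokenizer vocabulary has tens of thousands of entries, so in practice this is done as one matrix multiplication over normalized embeddings, which is why it only takes seconds.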

1·10 months ago

I compute `casualty_rate = number_shot / (number_shot + number_subdued)`.

In this case, that gives a 22/64 ≈ 34% casualty rate for civilians and a 98/131 ≈ 75% casualty rate for police.
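The same arithmetic as a short Python check. The subdued counts are not stated directly; they are back-calculated as total minus shot (64 - 22 = 42 and 131 - 98 = 33), assuming 64 and 131 are the shot-plus-subdued totals in the formula.

```python
def casualty_rate(number_shot, number_subdued):
    """casualty_rate = number_shot / (number_shot + number_subdued)"""
    return number_shot / (number_shot + number_subdued)


# Subdued counts back-calculated from the totals: 64 - 22 and 131 - 98.
civilians = casualty_rate(22, 64 - 22)
police = casualty_rate(98, 131 - 98)
print(f"civilians: {civilians:.0%}, police: {police:.0%}")  # civilians: 34%, police: 75%
```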

61·10 months ago

So it’s 64 vs. 131 between work done by bystanders and work done by police?

And the casualty rate is actually lower when bystanders do the work (with their guns) than for the police?

Feel free to try JoyCaption for captioning: https://lemmy.world/post/30096816