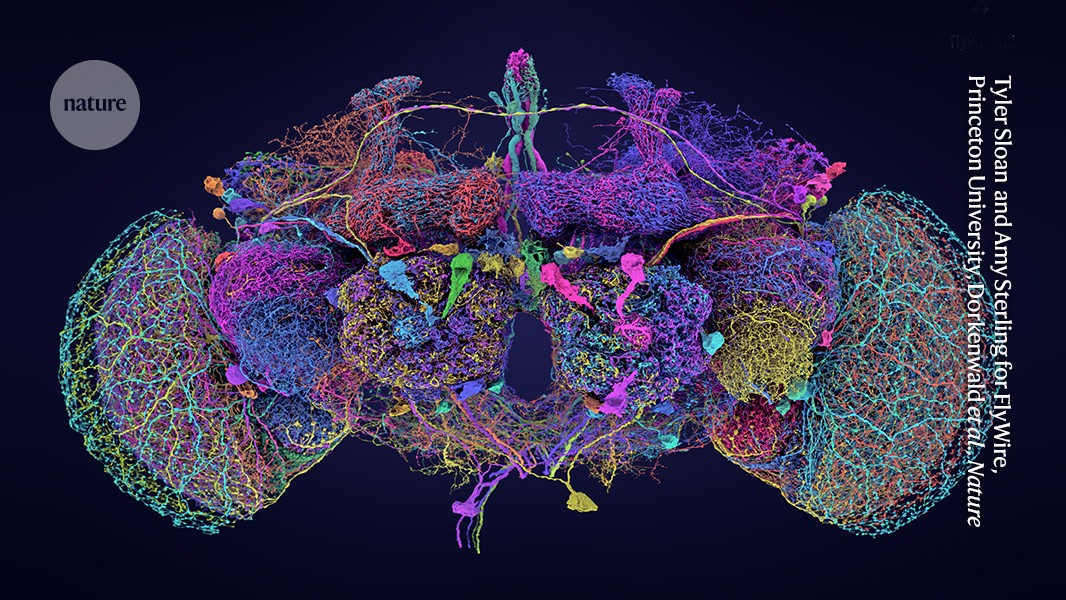

"… Researchers are hoping to do that now that they have a new map — the most complete for any organism so far — of the brain of a single fruit fly (Drosophila melanogaster). The wiring diagram, or ‘connectome’, includes nearly 140,000 neurons and captures more than 54.5 million synapses, which are the connections between nerve cells.

… The map is described in a package of nine papers about the data published in Nature today. Its creators are part of a consortium known as FlyWire, co-led by neuroscientists Mala Murthy and Sebastian Seung at Princeton University in New Jersey."

See the associated Nature collection, "The FlyWire connectome: neuronal wiring diagram of a complete fly brain", which also links to the nine papers.

All nine papers are open access!

The team was surprised by some of the ways in which the various cells connect to one another, too. For instance, neurons that were thought to be involved in just one sensory wiring circuit, such as a visual pathway, tended to receive cues from multiple senses, including hearing and touch [1]. “It’s astounding how interconnected the brain is,” Murthy says.

Could this help explain synesthesia, or any similar condition?

Well, there is a phenomenon where your brain will make sound and sight “match up” even when they shouldn’t. If you hear and see a basketball bouncing 75 meters away, the sound should arrive with a delay of about 0.22 seconds (sound travels at roughly 343 m/s in air). But your brain will make you perceive the sound as happening simultaneously with the ball hitting the ground, even though the visual and auditory signals did not reach you at the same time. If you go further away from the ball, eventually there is a threshold where you start perceiving the delay. The auditory and visual senses would need to be linked somehow for this phenomenon to happen, I’d say.
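For anyone who wants to check the delay, here is a quick back-of-the-envelope sketch. It assumes sound travels at about 343 m/s (dry air at around 20 °C) and treats light as arriving instantly; the function name is just something I made up for illustration.

```python
# Assumed value: speed of sound in dry air at about 20 degrees Celsius.
SPEED_OF_SOUND_M_PER_S = 343.0


def sound_delay_seconds(distance_m: float) -> float:
    """Return how long sound takes to cover distance_m.

    Light's travel time over these distances is negligible, so this is
    effectively the gap between seeing and hearing the bounce.
    """
    return distance_m / SPEED_OF_SOUND_M_PER_S


# Print the see-to-hear gap for a few distances, in milliseconds.
for distance in (10, 75, 150):
    delay_ms = sound_delay_seconds(distance) * 1000
    print(f"{distance:>4} m -> {delay_ms:.0f} ms")
```

The gap grows linearly with distance, which fits the idea that there is some distance beyond which the brain stops papering over it.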

I guess it’s the brain’s way of matching visual and auditory cues to try to make a better picture of the world. The brain is basically saying “that sound came from the ball” and you don’t even need to think consciously to know that.

There’s also really tight coordination between sight and proprioception: our visual processing seamlessly stitches stereoscopic signals from two eyes into a three-dimensional model of reality, even if we’re moving while taking in that visual information.

OK, that makes a lot of sense (pun intended af). Thank you!

So can we model this now?

Can we use this data to essentially emulate a fruit fly’s behavioral patterns?

Like can we just wire this up in a software neural network, feed it some inputs, and see what happens?

As far as I understand, not really, as neural networks are more of a metaphor than an analogue. They don’t have a one-to-one correspondence to how brain neurons behave.

In a physical (as in physics) sense, it’s because software neural nets are inherently digital, whereas actual neurons function in the analog (in terms of electrical impulses, as well as chemically) domain. We don’t have tech to accurately and effectively represent all of that.

Audio is inherently analogue, but you can record it into digital formats just fine.

It’s tempting to say “well, that’s different though” but it really isn’t.

Just like with audio, you’ll need high enough fidelity encoding to make it all work, otherwise you end up with garbage.
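To make the fidelity point concrete, here is a minimal sketch, not a claim about how neurons should be digitized. It quantizes a sine wave (standing in for any analog signal) at a few bit depths and measures how far the digital copy drifts from the original. The helper names and the choice of a sine wave are my own assumptions.

```python
import math


def quantize(x: float, bits: int) -> float:
    """Round x in [-1, 1] to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits
    step = 2.0 / (levels - 1)
    return round((x + 1.0) / step) * step - 1.0


def rms_error(bits: int, n_samples: int = 1000) -> float:
    """RMS difference between one sine cycle and its quantized version."""
    total = 0.0
    for i in range(n_samples):
        x = math.sin(2 * math.pi * i / n_samples)
        total += (x - quantize(x, bits)) ** 2
    return math.sqrt(total / n_samples)


# More bits per sample means a smaller gap between analog and digital.
for bits in (2, 4, 8, 16):
    print(f"{bits:>2} bits: RMS error {rms_error(bits):.6f}")
```

The error shrinks quickly as the bit depth goes up. The open question for neurons is what counts as "enough bits" and which quantities need encoding in the first place.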

Based on my understanding of how these things work: Yes, probably no, and probably no… I think the map is just a “catalogue” of what things are, not at the point where we can do fancy models on it

This is their GitHub account. Anyone knowledgeable enough about research software engineering is welcome to give it a try.

There are a few neuroscientists who are trying to decipher biological neural connections using principles from deep learning (a.k.a. AI/ML), though I don’t think this is a popular subfield. Andreas Tolias is the first one that comes to mind; he and a bunch of folks from Columbia/Baylor were in a consortium when I started my PhD… not sure if that consortium is still going. His lab website (SSL cert expired bruh). They might answer the second and third questions you raised… no idea when though.

They have a picture/model/skeleton, but can’t yet simulate the data that flows through those structures.

Well there is no “data” per se, there’s voltages and a wiring map. And this article is talking about having the complete wiring map.

The neurons deliver electrical pulses across synapses. The thickness and length of the synapse can affect the voltage or amplitude transmitted across to the next neuron. And again, if we have this fairly complete map of synapses, we may have enough information to calculate the electrical outputs of each neuron when it fires.

My understanding is that neurons work something like transistors: they receive signals, and when triggered by a strong enough signal, or by enough simultaneous signals, the neuron will also fire and transmit down its synapses. With this alone you absolutely have enough structure for very complex decision making, much like a microprocessor.

I guess the question is really how accurate is this map? If we have a clear enough picture of every synaptic connection, we could simply simulate behavior in software…
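Here is roughly what that "fire when the summed input is strong enough" idea looks like as a toy sketch. Everything in it is made up for illustration: the four neuron names, the weights, and the threshold are hypothetical, not values from the FlyWire data, and it ignores timing, inhibition, and chemistry entirely.

```python
# Hypothetical toy wiring map: synapses[pre] = list of (post, weight) pairs.
synapses = {
    "A": [("C", 0.6)],
    "B": [("C", 0.6)],
    "C": [("D", 1.2)],
    "D": [],
}
THRESHOLD = 1.0  # assumed firing threshold, same for every neuron


def step(active: set) -> set:
    """Advance one time step.

    Sum the weighted input each neuron receives from the currently firing
    neurons, then return the neurons whose input reaches the threshold.
    """
    drive = {name: 0.0 for name in synapses}
    for pre in active:
        for post, weight in synapses[pre]:
            drive[post] += weight
    return {name for name, total in drive.items() if total >= THRESHOLD}


def run(initial: set, n_steps: int) -> list:
    """Return the set of firing neurons at each step, starting with initial."""
    history = [set(initial)]
    for _ in range(n_steps):
        history.append(step(history[-1]))
    return history


# A alone is too weak to trigger C; A and B together push C over threshold.
for start in ({"A"}, {"A", "B"}):
    print(sorted(start), "->", [sorted(s) for s in run(start, 2)])
```

With only A firing, nothing downstream fires. With A and B firing together, C fires on the next step and D on the one after. Whether a wiring map plus a rule this simple is enough to reproduce real fly behavior is exactly the open question.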

Very cool!

We still lack a general theory of neural activity, while mountains of data continue to accumulate. I hope we get closer with this effort.