A body camera captured every word and bark uttered as police Sgt. Matt Gilmore and his K-9 dog, Gunner, searched for a group of suspects for nearly an hour.

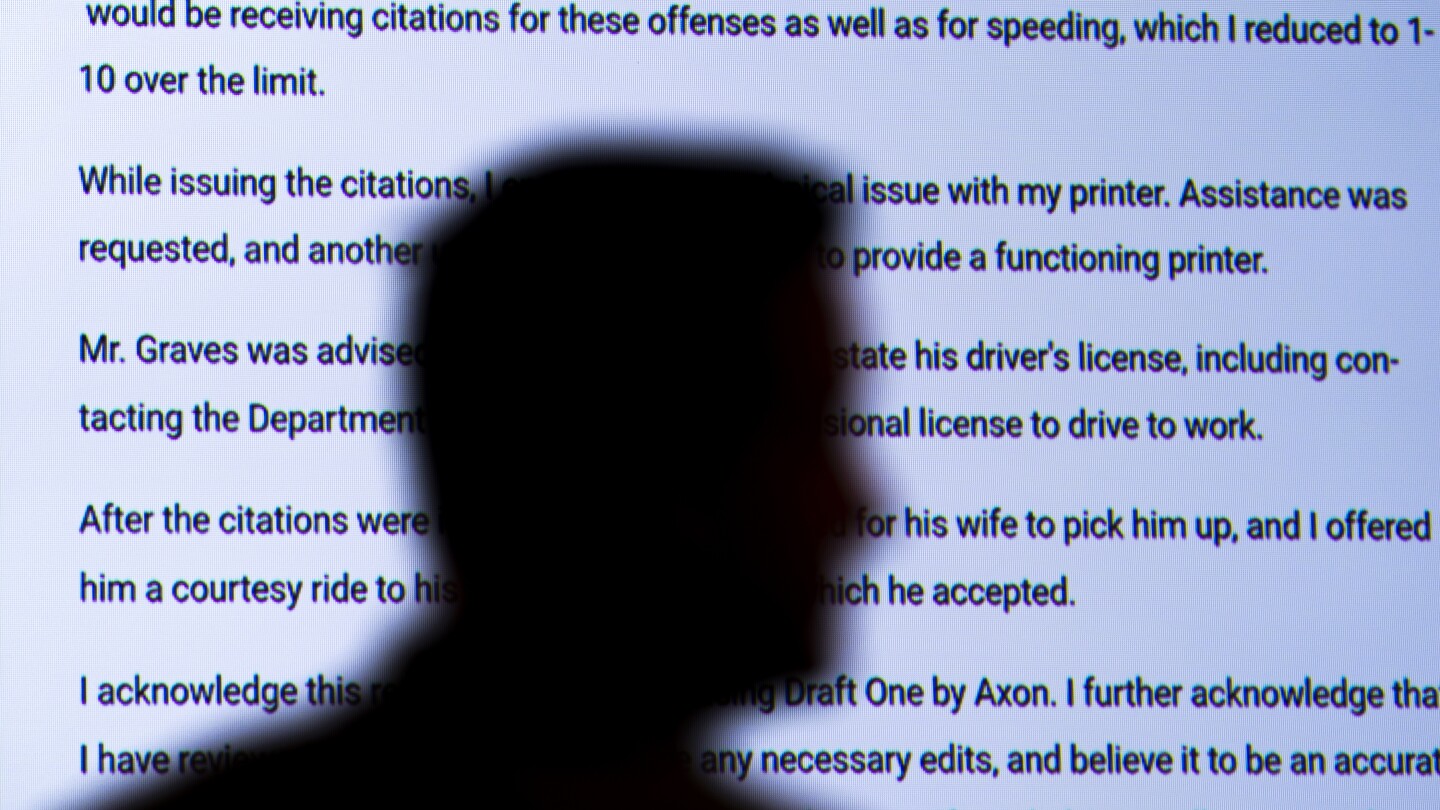

Normally, the Oklahoma City police sergeant would grab his laptop and spend another 30 to 45 minutes writing up a report about the search. But this time he had artificial intelligence write the first draft.

Pulling from all the sounds and radio chatter picked up by the microphone attached to Gilmore’s body camera, the AI tool churned out a report in eight seconds.

“It was a better report than I could have ever written, and it was 100% accurate. It flowed better,” Gilmore said. It even documented a fact he didn’t remember hearing: another officer’s mention of the color of the car the suspects ran from.

The rules of evidence place a lot of weight (honestly, an unreasonable amount) on eyewitness evidence and on contemporaneous reports made from it. The question for the courts will be whether an AI summary of a video recording has the same value as a human-written report from memory.

I agree that this is a good use of AI, but I would suggest that the courts should require an AI report to basically have the body cam recording stapled to it, ideally with timestamped references in the report. AI transcriptions are decent but not perfect, and in cases where there could be confusion, the way the courts treat these reports should allow both parties to review the recording and offer their own interpretations.

This would make sense as a supplement to the officer’s report to help corroborate facts. The question becomes what happens when the AI summary and the officer’s report conflict.

Or when the LLM hallucinates shit that didn’t happen?

The biggest problem with this is… who do you blame when it doesn’t work?